Setting up an HDFS HA cluster:

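Planned process layout: DN (DataNode): 3; NN (NameNode): 2; ZK (ZooKeeper): 3 (an odd number greater than 1); ZKFC: one per NameNode, on the same machine; JN (JournalNode): 3; RM (ResourceManager): 1; NM (NodeManager): 3, each on the same machine as a DataNode so that computation stays close to the data.

A √ means the process runs on that machine.

| | NN | DN | ZK | ZKFC | JN | RM | NM |
|---|---|---|---|---|---|---|---|
| Node1 | √ | | √ | √ | | √ | |
| Node2 | √ | √ | √ | √ | √ | | √ |
| Node3 | | √ | √ | | √ | | √ |
| Node4 | | √ | | | √ | | √ |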
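The odd-count rule for ZooKeeper follows from majority voting: an ensemble of n servers stays available only while more than half of them are up. The sketch below works through that arithmetic in shell; the function names are illustrative and not part of any Hadoop or ZooKeeper tooling.

```shell
# Smallest number of servers that forms a majority of an n-server ensemble.
quorum_size() {
    echo $(( $1 / 2 + 1 ))
}

# Number of servers that can fail while a majority still remains.
tolerated_failures() {
    echo $(( ($1 - 1) / 2 ))
}

for n in 3 4 5; do
    echo "n=$n quorum=$(quorum_size "$n") tolerated_failures=$(tolerated_failures "$n")"
done
```

Going from 3 to 4 servers raises the quorum from 2 to 3 without tolerating any additional failure, which is why an odd count is the usual choice. The three JournalNodes rely on the same majority rule.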

1. Extract hadoop-2.5.2.tar.gz

[hadoop@node1 software]$ tar -zxvf hadoop-2.5.2.tar.gz

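The -zxvf flags mean:

-z, --gzip : filter the archive through gzip (decompress it when extracting)

-x, --extract, --get : extract files and directories from the archive

-v, --verbose : list each file as it is processed

-f, --file=ARCHIVE : name of the archive file to read or create

Note that the environment here is 64-bit CentOS 7, so the native libraries in the tarball must also be 64-bit. To check whether a Hadoop tarball is 32-bit or 64-bit, inspect the native library: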

/hadoop-2.5.2/lib/native

[hadoop@node1 native]$ ls

libhadoop.a libhadoop.so libhadooputils.a libhdfs.so

libhadooppipes.a libhadoop.so.1.0.0 libhdfs.a libhdfs.so.0.0.0

[hadoop@node1 native]$ file libhadoop.so.1.0.0

libhadoop.so.1.0.0: ELF 64-bit LSB shared object, x86-64, version 1 (SYSV), dynamically linked, BuildID[sha1]=29e15e4c9d9840a7b96b5af3e5732e5935d91847, not stripped
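If the file utility is not installed, the same check can be approximated by reading the ELF header directly: bytes 0 to 3 are the magic number, and byte 4 (EI_CLASS) is 1 for 32-bit and 2 for 64-bit objects. This is a minimal sketch that assumes od is available; elf_class is a hypothetical helper name, not a Hadoop tool.

```shell
# Print 32 or 64 for an ELF file, "not-elf" for anything else.
elf_class() {
    # The first four bytes of an ELF file are 0x7f 'E' 'L' 'F'.
    magic=$(od -An -tx1 -N4 "$1" | tr -d ' \n')
    if [ "$magic" != "7f454c46" ]; then
        echo "not-elf"
        return 1
    fi
    # Byte 4 (EI_CLASS): 1 = 32-bit, 2 = 64-bit.
    case $(od -An -tu1 -j4 -N1 "$1" | tr -d ' \n') in
        1) echo 32 ;;
        2) echo 64 ;;
        *) echo unknown; return 1 ;;
    esac
}
```

Run from the lib/native directory, it would be called as elf_class libhadoop.so.1.0.0.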

2. In the extracted Hadoop directory, edit hadoop-env.sh (under etc/hadoop); the main change is JAVA_HOME

[hadoop@node1 hadoop]$ echo $JAVA_HOME

/usr/java/jdk1.7.0_75

[hadoop@node1 hadoop]$ vim hadoop-env.sh

export JAVA_HOME=/usr/java/jdk1.7.0_75

2. Edit hdfs-site.xml, following the official documentation

The properties to set are:

| Property | Value |
|---|---|
| dfs.nameservices | mycluster |
| dfs.ha.namenodes.mycluster | nn1,nn2 |
| dfs.namenode.rpc-address.mycluster.nn1 | node1.example.com:8020 |
| dfs.namenode.rpc-address.mycluster.nn2 | node2.example.com:8020 |
| dfs.namenode.http-address.mycluster.nn1 | node1.example.com:50070 |
| dfs.namenode.http-address.mycluster.nn2 | node2.example.com:50070 |
| dfs.namenode.shared.edits.dir | qjournal://node2:8485;node3:8485;node4:8485/mycluster |
| dfs.client.failover.proxy.provider.mycluster | org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider |
| dfs.ha.fencing.methods | sshfence |
| dfs.ha.fencing.ssh.private-key-files | /home/hadoop/.ssh/id_dsa |
| dfs.journalnode.edits.dir | /opt/jn/data |
| dfs.ha.automatic-failover.enabled | true |
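In the file itself, each name/value pair for hdfs-site.xml is written as a property element inside a configuration root, which is the standard Hadoop configuration layout. As a sketch, the shell helpers below generate that XML from name/value pairs; emit_property and emit_configuration are hypothetical helpers, and values are written verbatim without XML escaping.

```shell
# Print one <property> element for a name/value pair.
emit_property() {
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# Read "name value" pairs from stdin (one per line) and wrap them in <configuration>.
emit_configuration() {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    while read -r name value; do
        if [ -n "$name" ]; then
            emit_property "$name" "$value"
        fi
    done
    echo '</configuration>'
}

# Example with the first few properties of this cluster.
emit_configuration <<'EOF'
dfs.nameservices mycluster
dfs.ha.namenodes.mycluster nn1,nn2
dfs.namenode.shared.edits.dir qjournal://node2:8485;node3:8485;node4:8485/mycluster
EOF
```

Redirecting the output to a file and comparing it with the hand-edited hdfs-site.xml is one way to catch typos in host names or ports.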

3. Configure core-site.xml

| Property | Value |
|---|---|
| fs.defaultFS | hdfs://mycluster |
| ha.zookeeper.quorum | node1:2181,node2:2181,node3:2181 |
| hadoop.tmp.dir | /opt/hadoop |
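Both the ZooKeeper quorum string and the qjournal URI are host:port lists that differ only in port and separator. The sketch below builds them from host names so the two stay consistent with the node plan; join_hosts is a hypothetical helper introduced only for illustration.

```shell
# join_hosts PORT SEPARATOR HOST... prints HOST:PORT entries joined by SEPARATOR.
join_hosts() {
    port=$1
    sep=$2
    shift 2
    out=
    for h in "$@"; do
        # Prepend the separator only when something has already been emitted.
        out="${out:+$out$sep}$h:$port"
    done
    printf '%s\n' "$out"
}

# Value for ha.zookeeper.quorum
join_hosts 2181 , node1 node2 node3

# Value for dfs.namenode.shared.edits.dir
echo "qjournal://$(join_hosts 8485 ';' node2 node3 node4)/mycluster"
```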

4. Configure ZooKeeper (zoo.cfg)

initLimit=10

syncLimit=5

dataDir=/opt/zookeeper

clientPort=2181

server.1=node1:2888:3888

server.2=node2:2888:3888

server.3=node3:2888:3888

Create a myid file in the configured dataDir. On node1 it contains 1; node2 and node3 get their own values later.

[hadoop@node1 zookeeper]$ cat /opt/zookeeper/myid

1

Copy the /opt/zookeeper directory from node1 to node2 and node3:

[root@node1 opt]# scp -r zookeeper/ root@node2:/opt/

[root@node1 opt]# scp -r zookeeper/ root@node3:/opt/

Then edit the myid file on node2 and node3: set it to 2 on node2 and to 3 on node3.
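Each myid value must match the N of that host's server.N entry in zoo.cfg. As a sketch, the lookup below derives the id from the config instead of typing it by hand; myid_for is a hypothetical helper, and it assumes host names contain no regular-expression metacharacters.

```shell
# myid_for HOST ZOO_CFG prints the N of the "server.N=HOST:..." line.
myid_for() {
    sed -n "s/^server\.\([0-9][0-9]*\)=$1:.*/\1/p" "$2"
}

# Example: write the server entries used in this setup to a scratch file and look up each host.
cfg=$(mktemp)
printf 'server.1=node1:2888:3888\nserver.2=node2:2888:3888\nserver.3=node3:2888:3888\n' > "$cfg"
for h in node1 node2 node3; do
    echo "$h -> myid $(myid_for "$h" "$cfg")"
done
rm -f "$cfg"
```

On a real node the result could be written straight into the file, for example by redirecting the output of myid_for to /opt/zookeeper/myid.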

Copy the ZooKeeper installation directory from node1 to node2 and node3:

[hadoop@node1 software]$ scp -r zookeeper-3.4.9 hadoop@node2:/home/hadoop/software/

[hadoop@node1 software]$ scp -r zookeeper-3.4.9 hadoop@node3:/home/hadoop/software/

Set the ZK_HOME environment variable on all three nodes:

[root@node1 bin]# vim /etc/profile

export ZK_HOME=/home/hadoop/software/zookeeper-3.4.9

export PATH=$PATH:$ZK_HOME/bin

Turn off the firewall, then start ZooKeeper on each of the three machines:

# zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /home/hadoop/software/zookeeper-3.4.9/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

# jps

2791 Jps

2773 QuorumPeerMain

5. Configure slaves

[root@node1 hadoop]# vim slaves

node2

node3

node4

6. Copy the finished Hadoop configuration files (the contents of etc/hadoop) to the other nodes

[hadoop@node1 hadoop]$ scp * hadoop@node2:/home/hadoop/software/hadoop-2.5.2/etc/hadoop/

[hadoop@node1 hadoop]$ scp * hadoop@node3:/home/hadoop/software/hadoop-2.5.2/etc/hadoop/

[hadoop@node1 hadoop]$ scp * hadoop@node4:/home/hadoop/software/hadoop-2.5.2/etc/hadoop/

7. Start the JournalNodes

Start a JournalNode on each of node2, node3, and node4:

[hadoop@node2 sbin]$ ./hadoop-daemon.sh start journalnode

starting journalnode, logging to /home/hadoop/software/hadoop-2.5.2/logs/hadoop-hadoop-journalnode-node2.out

[hadoop@node2 sbin]$ jps

2581 JournalNode

2627 Jps

2378 QuorumPeerMain

8. Format the NameNode on one of the two NameNode machines

[hadoop@node1 bin]$ ./hdfs namenode -format

9. Copy the freshly formatted metadata to the other NameNode

9.1 First start the NameNode that was just formatted (start only the NameNode):

[hadoop@node1 sbin]$ ./hadoop-daemon.sh start namenode

[hadoop@node1 sbin]$ jps

2315 QuorumPeerMain

2790 NameNode

2859 Jps

9.2 Then run the following command on the NameNode that has not been formatted:

[hadoop@node2 bin]$ ./hdfs namenode -bootstrapStandby

Check that the corresponding directory has been created on node2.

10. Stop all services except ZooKeeper

[hadoop@node1 sbin]$ ./stop-dfs.sh

Stopping namenodes on [node1 node2]

node2: no namenode to stop

node1: stopping namenode

node2: no datanode to stop

node4: no datanode to stop

node3: no datanode to stop

Stopping journal nodes [node2 node3 node4]

node3: stopping journalnode

node2: stopping journalnode

node4: stopping journalnode

Stopping ZK Failover Controllers on NN hosts [node1 node2]

node2: no zkfc to stop

node1: no zkfc to stop

11. Format the ZKFC state in ZooKeeper; run this on either of the NameNode machines

[hadoop@node1 bin]$ ./hdfs zkfc -formatZK

12. Start HDFS

[hadoop@node1 sbin]$ ./start-dfs.sh

Starting namenodes on [node1 node2]

node1: starting namenode, logging to /home/hadoop/software/hadoop-2.5.2/logs/hadoop-hadoop-namenode-node1.out

node2: starting namenode, logging to /home/hadoop/software/hadoop-2.5.2/logs/hadoop-hadoop-namenode-node2.out

node4: starting datanode, logging to /home/hadoop/software/hadoop-2.5.2/logs/hadoop-hadoop-datanode-node4.out

node2: starting datanode, logging to /home/hadoop/software/hadoop-2.5.2/logs/hadoop-hadoop-datanode-node2.out

node3: starting datanode, logging to /home/hadoop/software/hadoop-2.5.2/logs/hadoop-hadoop-datanode-node3.out

Starting journal nodes [node2 node3 node4]

node3: starting journalnode, logging to /home/hadoop/software/hadoop-2.5.2/logs/hadoop-hadoop-journalnode-node3.out

node2: starting journalnode, logging to /home/hadoop/software/hadoop-2.5.2/logs/hadoop-hadoop-journalnode-node2.out

node4: starting journalnode, logging to /home/hadoop/software/hadoop-2.5.2/logs/hadoop-hadoop-journalnode-node4.out

Starting ZK Failover Controllers on NN hosts [node1 node2]

node1: starting zkfc, logging to /home/hadoop/software/hadoop-2.5.2/logs/hadoop-hadoop-zkfc-node1.out

node2: starting zkfc, logging to /home/hadoop/software/hadoop-2.5.2/logs/hadoop-hadoop-zkfc-node2.out

[hadoop@node1 sbin]$ jps

3662 Jps

2315 QuorumPeerMain

3345 NameNode

3616 DFSZKFailoverController
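The order of steps 7 to 12 matters: the JournalNodes must be running before the first NameNode is formatted, and the standby is bootstrapped only after the first NameNode is up. As a recap, the sketch below prints that sequence as ssh commands without executing anything. The use of ssh, the plan_step helper, and the hadoop login user are assumptions for illustration; the walkthrough itself runs each command by hand on the node in question.

```shell
# Installation path taken from the log paths in this walkthrough.
HADOOP_HOME=/home/hadoop/software/hadoop-2.5.2

# plan_step HOST COMMAND... prints the ssh invocation instead of running it (dry run).
plan_step() {
    host=$1
    shift
    echo "ssh hadoop@$host $HADOOP_HOME/$*"
}

print_bootstrap_plan() {
    # Step 7: JournalNodes first, so the shared edits directory is reachable.
    for jn in node2 node3 node4; do
        plan_step "$jn" sbin/hadoop-daemon.sh start journalnode
    done
    # Steps 8 and 9: format and start the first NameNode, then bootstrap the standby.
    plan_step node1 bin/hdfs namenode -format
    plan_step node1 sbin/hadoop-daemon.sh start namenode
    plan_step node2 bin/hdfs namenode -bootstrapStandby
    # Steps 10 to 12: stop HDFS, initialise the ZKFC state in ZooKeeper, start everything.
    plan_step node1 sbin/stop-dfs.sh
    plan_step node1 bin/hdfs zkfc -formatZK
    plan_step node1 sbin/start-dfs.sh
}

print_bootstrap_plan
```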

Use jps on the remaining nodes to check that the expected daemons have started:

[hadoop@node2 opt]$ jps

3131 JournalNode

3019 DataNode

3217 DFSZKFailoverController

2955 NameNode

3276 Jps

2378 QuorumPeerMain

[hadoop@node3 opt]$ jps

66237 JournalNode

2340 QuorumPeerMain

66148 DataNode

66294 Jps

[hadoop@node4 sbin]$ jps

2762 Jps

2618 DataNode

2706 JournalNode
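Reading jps output by eye across four nodes is easy to get wrong. As a sketch, the helper below takes jps output and a list of expected daemon names and prints whichever ones are missing; missing_daemons is a hypothetical name, and the sample input is the node4 output shown above.

```shell
# missing_daemons JPS_OUTPUT NAME... prints each expected NAME that is absent from the jps output.
missing_daemons() {
    jps_out=$1
    shift
    for d in "$@"; do
        # jps prints "<pid> <class name>"; compare the second field exactly.
        printf '%s\n' "$jps_out" |
            awk -v d="$d" '$2 == d { found = 1 } END { exit !found }' || echo "$d"
    done
}

# node4 should run a DataNode and a JournalNode; nothing is printed when both are present.
node4_jps='2762 Jps
2618 DataNode
2706 JournalNode'
missing_daemons "$node4_jps" DataNode JournalNode
```

On a live node the first argument would be the output of jps itself, for example missing_daemons "$(jps)" NameNode DFSZKFailoverController QuorumPeerMain on node1.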

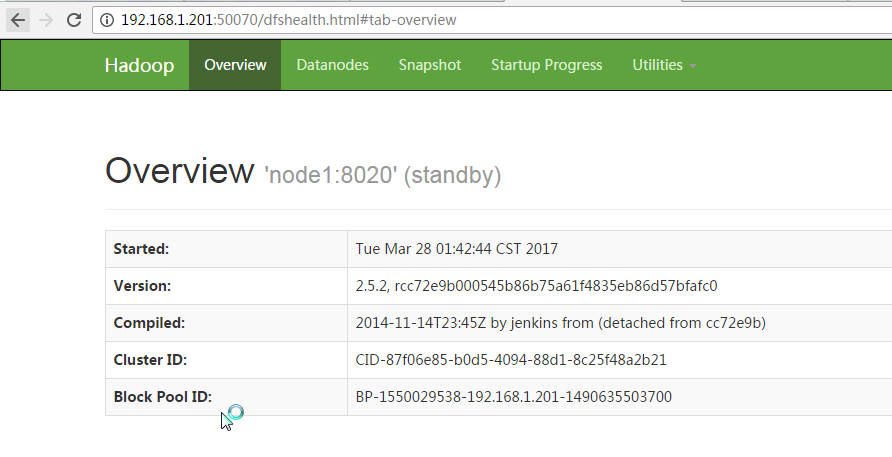

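13. Open the NameNode web UI in a browser (the dfs.namenode.http-address values, port 50070)

Which NameNode ends up active and which standby is decided by a race: the two ZKFCs compete for a lock in ZooKeeper, and the NameNode whose ZKFC acquires it first becomes active.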

Test by creating a directory and uploading a file:

[hadoop@node1 bin]$ ./hdfs dfs -mkdir -p /usr/file

[hadoop@node1 bin]$ ./hdfs dfs -put /home/hadoop/software/jdk-7u75-linux-x64.rpm /usr/file/

This completes the Hadoop 2.x HA setup.