本文共 11193 字,大约阅读时间需要 37 分钟。

模型训练

2021/3/10:使用训练好的Bert/Albert-CRF模型,同时,在此基础上,加一层BiLSTM网络,得修改后的Albert-BiLSTM-CRF模型(见下一篇文章),开始训练。

修改思路:以已有的Albert+CRF模型代码为基础,参考网上的Albert+BiLSTM+CRF模型,稍加修改即可。值得注意的,无非是“三种模型”之间的数据传递类型,比如,将Albert模型训练得到的embedding,传入BiLSTM(参考:)。

调试过程:其间,多次用到命令行,安装需要的库、工具包,按部就班去做即可。

import numpy as npfrom bert4keras.backend import keras, Kfrom bert4keras.models import build_transformer_modelfrom bert4keras.tokenizers import Tokenizerfrom bert4keras.optimizers import Adamfrom bert4keras.snippets import sequence_padding, DataGeneratorfrom bert4keras.snippets import open, ViterbiDecoderfrom bert4keras.layers import ConditionalRandomFieldfrom keras.layers import Densefrom keras.models import Modelfrom tqdm import tqdmfrom tensorflow import ConfigProtofrom tensorflow import InteractiveSessionfrom numpy import arrayfrom keras.models import Sequentialfrom keras.layers import LSTMfrom keras.layers import Densefrom keras.layers import Bidirectionalfrom keras.layers import Dropoutfrom keras.layers import TimeDistributedfrom keras_contrib.layers import CRFfrom keras_contrib.losses import crf_lossfrom keras_contrib.metrics import crf_accuracy, crf_viterbi_accuracyconfig = ConfigProto()# config.gpu_options.per_process_gpu_memory_fraction = 0.2config.gpu_options.allow_growth = Truesession = InteractiveSession(config=config)maxlen = 256epochs = 1#10batch_size = 16bert_layers = 12learing_rate = 1e-5 # bert_layers越小,学习率应该要越大crf_lr_multiplier = 10 # 必要时扩大CRF层的学习率#1000# # bert配置# config_path = './bert_model/chinese_L-12_H-768_A-12/bert_config.json'# checkpoint_path = './bert_model/chinese_L-12_H-768_A-12/bert_model.ckpt'# dict_path = './bert_model/chinese_L-12_H-768_A-12/vocab.txt'#albert配置config_path = './bert_model/albert_large/albert_config.json'checkpoint_path = './bert_model/albert_large/model.ckpt-best'dict_path = './bert_model/albert_large/vocab_chinese.txt'def load_data(filename): D = [] with open(filename, encoding='utf-8') as f: f = f.read() for l in f.split('\n\n'): if not l: continue d, last_flag = [], '' for c in l.split('\n'): char, this_flag = c.split(' ') if this_flag == 'O' and last_flag == 'O': d[-1][0] += char elif this_flag == 'O' and last_flag != 'O': d.append([char, 'O']) elif this_flag[:1] == 'B': d.append([char, this_flag[2:]]) else: d[-1][0] += char last_flag = this_flag D.append(d) return D# 标注数据train_data = load_data('./data/example.train')valid_data = load_data('./data/example.dev')test_data = load_data('./data/example.test')# 建立分词器tokenizer = Tokenizer(dict_path, do_lower_case=True)# 类别映射labels = ['PER', 'LOC', 'ORG']id2label = dict(enumerate(labels))label2id = {j: i for i, j in id2label.items()}num_labels = len(labels) * 2 + 1class data_generator(DataGenerator): """数据生成器 """ def __iter__(self, random=False): batch_token_ids, batch_segment_ids, batch_labels = [], [], [] for is_end, item in self.sample(random): token_ids, labels = [tokenizer._token_start_id], [0] for w, l in item: w_token_ids = tokenizer.encode(w)[0][1:-1] if len(token_ids) + len(w_token_ids) < maxlen: token_ids += w_token_ids if l == 'O': labels += [0] * len(w_token_ids) else: B = label2id[l] * 2 + 1 I = label2id[l] * 2 + 2 labels += ([B] + [I] * (len(w_token_ids) - 1)) else: break token_ids += [tokenizer._token_end_id] labels += [0] segment_ids = [0] * len(token_ids) batch_token_ids.append(token_ids) batch_segment_ids.append(segment_ids) batch_labels.append(labels) if len(batch_token_ids) == self.batch_size or is_end: batch_token_ids = sequence_padding(batch_token_ids) batch_segment_ids = sequence_padding(batch_segment_ids) batch_labels = sequence_padding(batch_labels) yield [batch_token_ids, batch_segment_ids], batch_labels batch_token_ids, batch_segment_ids, batch_labels = [], [], []"""后面的代码使用的是bert类型的模型,如果你用的是albert,那么前几行请改为:"""model = build_transformer_model( config_path, checkpoint_path, model='albert',)output_layer = 'Transformer-FeedForward-Norm'albert_output = model.get_layer(output_layer).get_output_at(bert_layers - 1)lstm = Bidirectional(LSTM(units=128, return_sequences=True), name="bi_lstm")(albert_output)drop = Dropout(0.1, name="dropout")(lstm)dense = TimeDistributed(Dense(num_labels, activation="softmax"), name="time_distributed")(drop)output = Dense(num_labels)(dense)CRF = ConditionalRandomField(lr_multiplier=crf_lr_multiplier)output = CRF(output)model = Model(model.input, output)model.summary()model.compile( loss=CRF.sparse_loss, optimizer=Adam(learing_rate), metrics=[CRF.sparse_accuracy])class NamedEntityRecognizer(ViterbiDecoder): """命名实体识别器 """ def recognize(self,text): tokens = tokenizer.tokenize(text) while len(tokens) > 512: tokens.pop(-2) mapping = tokenizer.rematch(text, tokens) token_ids = tokenizer.tokens_to_ids(tokens) segment_ids = [0] * len(token_ids) nodes = model.predict([[token_ids], [segment_ids]])[0] labels = self.decode(nodes) entities, starting = [], False for i, label in enumerate(labels): if label > 0: if label % 2 == 1: starting = True entities.append([[i], id2label[(label - 1) // 2]]) elif starting: entities[-1][0].append(i) else: starting = False else: starting = False return [(text[mapping[w[0]][0]:mapping[w[-1]][-1] + 1], l) for w, l in entities]def evaluate(data): """评测函数 """ X, Y, Z = 1e-10, 1e-10, 1e-10 for d in tqdm(data): text = ''.join([i[0] for i in d]) R = set(NER.recognize(text)) T = set([tuple(i) for i in d if i[1] != 'O']) X += len(R & T) Y += len(R) Z += len(T) f1, precision, recall = 2 * X / (Y + Z), X / Y, X / Z return f1, precision, recallclass Evaluate(keras.callbacks.Callback): def __init__(self): self.best_val_f1 = 0 def on_epoch_end(self, epoch, logs=None): trans = K.eval(CRF.trans) NER.trans = trans print(NER.trans) f1, precision, recall = evaluate(valid_data) # 保存最优 if f1 >= self.best_val_f1: self.best_val_f1 = f1 model.save_weights('best_model.weights') print( 'valid: f1: %.5f, precision: %.5f, recall: %.5f, best f1: %.5f\n' % (f1, precision, recall, self.best_val_f1) ) f1, precision, recall = evaluate(test_data) print( 'test: f1: %.5f, precision: %.5f, recall: %.5f\n' % (f1, precision, recall) )if __name__ == '__main__': evaluator = Evaluate() train_generator = data_generator(train_data, batch_size) model.fit_generator( train_generator.forfit(), steps_per_epoch=len(train_generator), epochs=epochs, callbacks=[evaluator] )else: model.load_weights('best_model.weights') 模型评估

2021/3/11:今早,查看Albert+BiLSTM+CRF模型运行结果,发现其精度很低,仅为0.8左右。然而,使用同样的数据,Albert+CRF模型精度在0.95以上。→→→思考其中原因,尝试调整代码:①尝试调整LSTM相关参数(dropout),甚至去除dropout,皆无改善。②尝试去除dropout与。dropout的作用?防止模型过拟合,但我认为,其使用需要看场景,参考:最后dense层的作用?我认为,可以将其理解为分类输出层,因此模型中有CRF用于输出转换,故可能不需要dens层。参考:→→→去除下面代码后两行后,Albert+BiLSTM+CRF模型精度在0.95以上。至于模型原理,待深究。

lstm = Bidirectional(LSTM(units=128, return_sequences=True), name="bi_lstm")(albert_output)#drop = Dropout(0.2, name="dropout")(lstm)#dense = TimeDistributed(Dense(num_labels, activation="softmax"), name="time_distributed")(drop)

读写文件

2021/3/12:上午,一直在尝试Python读写文件,如此简单之事,竟耗费我两小时之久。原因:总是报错'open' object has no attribute 'readlines'。解决思路:新建一个py文件,在里面进行读写操作,可行。然而,同样的语句,在Albert+BiLSTM+CRF模型py文件中,不可行。→这说明,语句本身没错,可能是Albert+BiLSTM+CRF模型py文件中变量/函数等名称与读写语句冲突。→的确如此,Albert+BiLSTM+CRF模型py文件的开头,有“from bert4keras.snippets import open, ViterbiDecoder”,此"open"非彼"open"。

model.load_weights('best_model.weights')NER = NamedEntityRecognizer(trans=K.eval(CRF.trans), starts=[0], ends=[0])r = open("D:\Asian elephant\gao A_geography_NER\A_geography_NER\data\\result.txt", 'w')with open("D:\Asian elephant\gao A_geography_NER\A_geography_NER\data\\t.txt",'r',encoding='utf-8') as tt: content = tt.readlines()for line in content: ner=NER.recognize(line) print(ner,file=r)

模型训练

2021/3/14:训练模型(迭代3次,学习率设为1000,其他参数设置如下)。

训练数据:现有标注数据集+自己标注的数据;测试数据:自己标注的数据;验证数据:自己标注的数据。

耗时:纯CPU,迭代一次大约需要7小时。

结果LOW:epoch 1 →1304/1304:loss: 3.9929 - sparse_accuracy: 0.9648,test: f1: 0.13333, precision: 0.41176, recall: 0.07955,valid: f1: 0.15493, precision: 0.64706, recall: 0.08800, best f1: 0.15493

epoch 2→1304/1304:loss: 0.5454 - sparse_accuracy: 0.9849,test: f1: 0.25455, precision: 0.63636, recall: 0.15909,valid: f1: 0.18919, precision: 0.60870, recall: 0.11200, best f1: 0.18919

epoch 3→test与valid的precision达0.7以上

maxlen = 256 #文本保留的最大长度epochs = 3 #迭代次数batch_size = 16 #训练时,每次传入模型的特征数量bert_layers = 12learing_rate = 1e-5 # bert_layers越小,学习率应该要越大crf_lr_multiplier = 1000 # 必要时扩大CRF层的学习率#1000

各种bug及其解决

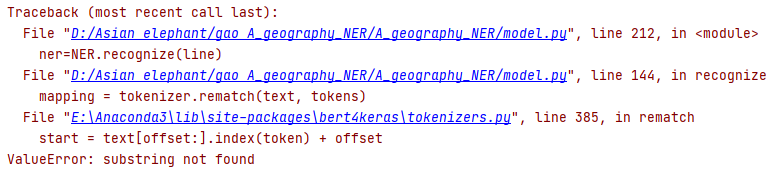

ValueError: substring not found

2021/3/5:问题:迭代三次的模型已训练完毕,但将所有数据放入模型时,得到上述bug。解决:解决bug并不难,甚至无需了解其原理,只需进行比对——多试几次,发现数据中报错行的规律。本以为是标点符号的问题,但排查过后,了解到,是字母的问题。

ValueError: not enough values to unpack (expected 2, got 1)

2021/3/13:问题:其他设置一致,仅是使用的数据不同,精度结果却大相径庭。使用Albert+BiLSTM+CRF模型代码包自带的训练数据用于训练模型,使用自己标注的少量数据用于测试与验证,得到较好的结果;但在训练数据中,加上自己标注的少量数据,一起用于训练,却得到很差的结果。解决:仍是,找不同。我标注的数据与原数据有何不同?答:是否有'\n',这“不起眼”的'\n',却有很重要的作用(如下)。

2021/3/15:新增一些自己标注的数据,而后,程序又报错。错误原因:类似于2021/3/13那次报错原因,仍是数据里的格式问题(字符/空格/换行符多余或缺失),但本次错误更为细致——文件末尾两个换行符的缺失,而这两个换行符十分重要(见代码中的for l in f.split('\n\n'): #查找双换行符)。解决方案:仍是对比正确数据VS我的报错数据,①以为是数据中空格的问题(上次是此原因报错),就一直纠结空格;②对比的所谓“正确数据”并非原始的、真正正确的数据,导致迟迟未能解决。

def load_data(filename): #加载标注数据:训练集、测试集与验证集 D = [] with open(filename, encoding='utf-8') as f: #打开并读取文件内容 f = f.read() #读取文件全部内容 for l in f.split('\n\n'): #查找双换行符 if not l: #若无双换行符 continue #跳出本次循环,可执行下一次 (而break是跳出整个循环) d, last_flag = [], '' for c in l.split('\n'): #查找换行符 char, this_flag = c.split(' ') if this_flag == 'O' and last_flag == 'O': d[-1][0] += char elif this_flag == 'O' and last_flag != 'O': d.append([char, 'O']) elif this_flag[:1] == 'B': #从索引0开始取,到1,但不包括1(即标注首字母为B) d.append([char, this_flag[2:]]) #从索引2开始取,char竖着到最后,如“梁子老寨”每个字的标注都非O,输出('梁子老寨', 'LOC')。 else: d[-1][0] += char #若无换行符, last_flag = this_flag D.append(d) return D#结果格式:[('良子', 'LOC'), ('勐乃通达', 'LOC'), ('梁子老寨', 'LOC'), ('黑山', 'LOC'), ('黑山', 'LOC'), ('勐乃通达', 'LOC')]

转载地址:https://blog.csdn.net/YWP_2016/article/details/114648476 如侵犯您的版权,请留言回复原文章的地址,我们会给您删除此文章,给您带来不便请您谅解!

发表评论

最新留言

关于作者