全国省市县数据爬虫

发布日期:2021-06-29 11:49:51

浏览次数:2

分类:技术文章

本文共 4451 字,大约阅读时间需要 14 分钟。

项目需要全国省市县数据,网上找了一圈发现要么过时要么收费,于是花点时间自己写了个爬虫爬了些基础数据,基本上够用了,数据是从爬来的,目前更新到2019年,代码如下:

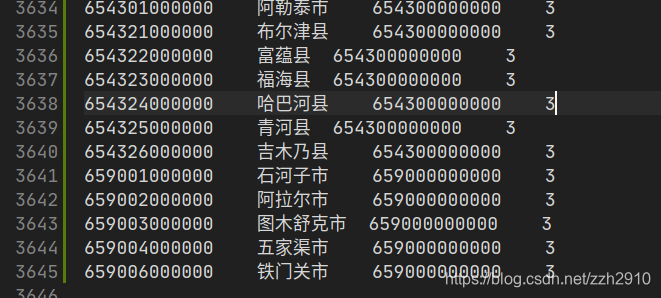

import requestsfrom requests.adapters import HTTPAdapterfrom bs4 import BeautifulSoupimport reimport timeheaders = { 'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) ' 'AppleWebKit/537.36 (KHTML, like Gecko) ' 'Chrome/67.0.3396.99 Safari/537.36'}def get_page(url): try: s = requests.Session() s.mount('http://', HTTPAdapter(max_retries=2)) # 重试次数 r = s.get(url, headers=headers, timeout=3) r.raise_for_status() r.encoding = r.apparent_encoding return r.text except Exception as e: print(e) return ''# 解析省级数据,返回(省份链接,id, 名字)def parse_province(page): provinces = [] id_pattern = r'(.*).html' # 用于提取链接中的id信息 soup = BeautifulSoup(page, 'lxml') province_trs = soup.find_all(class_='provincetr') # 有些空标签,所以需要很多判断 for tr in province_trs: if tr: province_items = tr.find_all(name='td') for item in province_items: if item: a = item.find(name='a') if a: next_url = a.get('href') id_ = re.search(id_pattern, next_url).group(1) id_ += '0' * (12 - len(id_)) # 省份id只给了前几位,补全到12位 name = a.text provinces.append((next_url, id_, name)) return provinces# 解析市级数据,返回(市级链接,id, 名字)def parse_city(page): citys = [] soup = BeautifulSoup(page, 'lxml') city_trs = soup.find_all(class_='citytr') for tr in city_trs: if tr: tds = tr.find_all(name='td') next_url = tds[0].find(name='a').get('href') id_ = tds[0].text name = tds[1].text citys.append((next_url, id_, name)) return citys# 解析区县数据,由于不需要下一级数据,并且保持格式统一,返回(None, id, 名字)def parse_district(page): districts = [] soup = BeautifulSoup(page, 'lxml') district_trs = soup.find_all(class_='countytr') for tr in district_trs: if tr: tds = tr.find_all(name='td') id_ = tds[0].text name = tds[1].text districts.append((None, id_, name)) return districts# 写入一串记录到指定文件def write_lines(file, type, data_list, parent_id, level): with open(file, type, encoding='utf-8') as f: for data in data_list: f.write(data[1] + '\t' + data[2] + '\t' + parent_id + '\t' + level + '\n')if __name__ == "__main__": root = 'http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2019/' province_list = [] city_list = [] # 爬取省份数据 province_page = get_page(root) if province_page: province_list = parse_province(province_page) if province_list: write_lines('areas.txt', 'w', province_list, '0', '1') print('='*20) print('省份数据写入完成') print('='*20) else: print('省份列表为空') else: print('省份页面获取失败') # 爬取市级数据 if province_list: for province in province_list: province_url = root + province[0] province_id = province[1] province_name = province[2] city_page = get_page(province_url) if city_page: city_list_tmp = parse_city(city_page) if city_list_tmp: city_list += city_list_tmp write_lines('areas.txt', 'a', city_list_tmp, province_id, '2') print(province_name+'市级数据写入完成') time.sleep(1) else: print(province_name+'市级列表为空') else: print(province_name+'市级页面获取失败') print('='*20) print('市级数据写入完成') print('='*20) # 爬取区县数据 if city_list: for city in city_list: city_url = root + city[0] city_id = city[1] city_name = city[2] district_page = get_page(city_url) if district_page: district_list = parse_district(district_page) if district_list: write_lines('areas.txt', 'a', district_list, city_id, '3') print(city_name+'区县数据写入完成') time.sleep(1) else: print(city_name+'区县列表为空') else: print(city[2]+'区县页面获取失败') print('='*20) print('区县数据写入完成') print('='*20) 最终数据格式为 (id,名字,上级id,级别(1,2,3分别代表省市县)) :

共有3600多条数据

转载地址:https://blog.csdn.net/zzh2910/article/details/104619629 如侵犯您的版权,请留言回复原文章的地址,我们会给您删除此文章,给您带来不便请您谅解!

发表评论

最新留言

做的很好,不错不错

[***.243.131.199]2024年04月22日 00时59分52秒

关于作者

喝酒易醉,品茶养心,人生如梦,品茶悟道,何以解忧?唯有杜康!

-- 愿君每日到此一游!

推荐文章

非递减数列

2019-04-29

AUC粗浅理解笔记记录

2019-04-29

分治法:241. 为运算表达式设计优先级

2019-04-29

广度优先遍历:二进制矩阵中的最短路径

2019-04-29

广度优先遍历:set集合的速度远远比list快:完全平方数

2019-04-29

广度+深度:岛屿的最大面积/岛屿数量

2019-04-29

torch 模型运行时间与forward没对应的可能原因

2019-04-29

130. 被围绕的区域

2019-04-29

欧式距离、余弦相似度和余弦距离

2019-04-29

transform 等效转换(参考源码)

2019-04-29

cv2 PIL区别笔记

2019-04-29

C#中的委托

2019-04-29

引用类型和值类型

2019-04-29

一个合格程序员该做的事情——你做好了吗?

2019-04-29

再谈如何表现已点击的链接2

2019-04-29

多线程消息队列 (MSMQ) 触发器

2019-04-29

WCF开发简简单单的六个步骤

2019-04-29

4.23数学作业答案

2019-04-29

4月24语文作业答案

2019-04-29

4.26数学作业

2019-04-29