Hadoop: Operating on HDFS Files in Java

Published: 2021-06-29 12:30:24

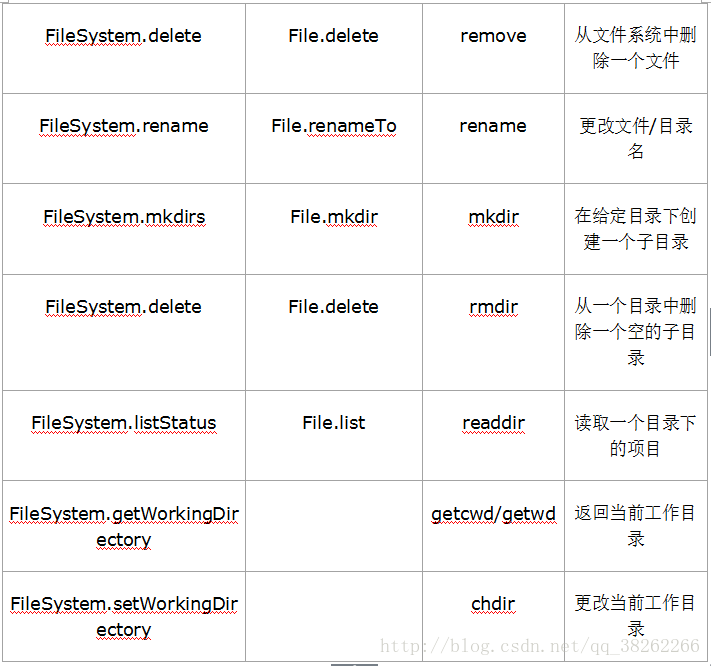

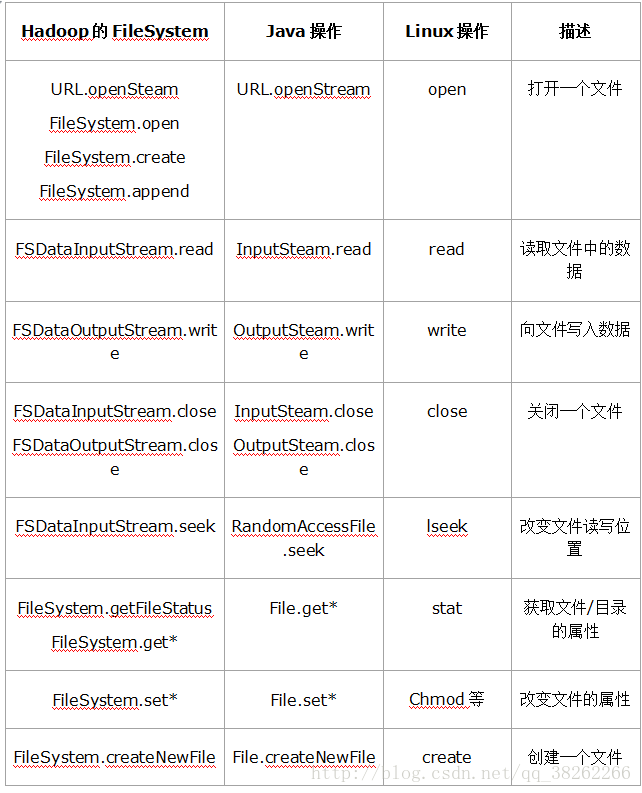

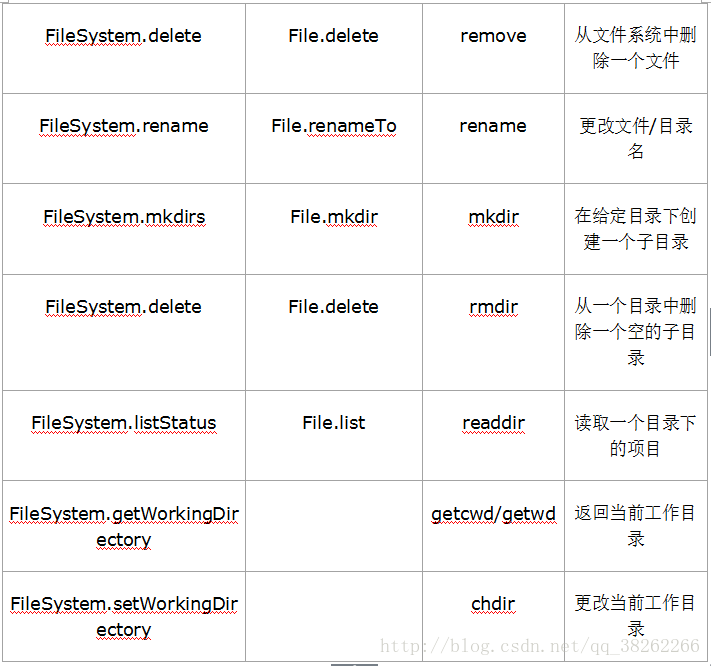

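The snippets below assume the following imports and are meant to run inside a method that declares throws IOException.

    import java.io.BufferedInputStream;
    import java.io.FileInputStream;
    import java.io.InputStream;
    import java.io.OutputStream;
    import java.net.URI;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataInputStream;
    import org.apache.hadoop.fs.FSDataOutputStream;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IOUtils;

Basic setup

    // Point Hadoop at the local installation directory
    System.setProperty("hadoop.home.dir", "/home/xm/hadoop-2.7.1");
    String uri = "hdfs://master:9000";
    Configuration conf = new Configuration();
    // Obtain a FileSystem handle for the cluster at the given URI
    FileSystem fs = FileSystem.get(URI.create(uri), conf);
    Path path = new Path("hdfs://master:9000/input/input3.txt");

Create a directory

    Path path = new Path("hdfs://master:9000/input");
    fs.mkdirs(path);

Create a file

    Path path = new Path("hdfs://master:9000/input/input3.txt");
    fs.createNewFile(path);

Delete a directory or a file (only the path changes)

    Path path = new Path("hdfs://master:9000/input/input3.txt");
    // The one-argument delete(Path) is deprecated; true deletes directories recursively
    fs.delete(path, true);

Write to a file

    Path path = new Path("hdfs://master:9000/input/input3.txt");
    FSDataOutputStream out = fs.create(path);
    out.writeUTF("hello hadoop!");
    // Close the stream so the data is flushed to HDFS
    out.close();

Read a file, method 1. Use this only on files written with writeUTF as above: readUTF expects the two-byte length prefix that writeUTF produces, and it fails or returns garbage on ordinary text files.

    Path path = new Path("hdfs://master:9000/input/input3.txt");
    FSDataInputStream inStream = fs.open(path);
    String data = inStream.readUTF();
    System.out.println(data);
    inStream.close();

Read a file, method 2. This is the general-purpose version: it copies the raw bytes to standard output, so it works for any file and does not garble the text.

    Path path = new Path("hdfs://master:9000/input/input3.txt");
    InputStream in = null;
    try {
        in = fs.open(path);
        // Copy with a 4096-byte buffer; System.out is left open
        IOUtils.copyBytes(in, System.out, 4096);
    } finally {
        IOUtils.closeStream(in);
    }

Import the contents of a local file into an HDFS file

    Path path = new Path("hdfs://master:9000/input/input3.txt");
    String p = "/home/xm/aaaa";
    InputStream in = new BufferedInputStream(new FileInputStream(p));
    OutputStream out = fs.create(path);
    // The final argument true closes both streams when the copy finishes,
    // so no separate closeStream calls are needed
    IOUtils.copyBytes(in, out, 4096, true);

Append a local file to an HDFS file

    Path path = new Path("hdfs://master:9000/input/input3.txt");
    String p = "/home/xm/aaaa";
    InputStream in = new BufferedInputStream(new FileInputStream(p));
    OutputStream out = fs.append(path);
    // true closes both streams when the copy finishes
    IOUtils.copyBytes(in, out, 4096, true);

If the append fails, add the following settings to conf. They should be set before FileSystem.get is called so that the client picks them up.

    conf.setBoolean("dfs.support.append", true);
    conf.set("dfs.client.block.write.replace-datanode-on-failure.policy", "NEVER");
    conf.set("dfs.client.block.write.replace-datanode-on-failure.enable", "true");

Manual append. The version originally posted could fail, and the author asked readers for a fix. The likely cause is that it took the length from "end hadoop!" (11 bytes) but wrote from the bytes of "end hadoop" (10 bytes), so write was asked for more bytes than the array holds. It also wrapped the call in a while loop that always broke after one pass. Using a single byte array for both the data and the length avoids the problem:

    FSDataOutputStream out = fs.append(path);
    byte[] data = "end hadoop!".getBytes();
    out.write(data, 0, data.length);
    out.close();

Copy a local file into an HDFS directory

    String srcFile = "/home/xm/aaaa";
    Path srcPath = new Path(srcFile);
    String dstFile = "hdfs://master:9000/input/";
    Path dstPath = new Path(dstFile);
    fs.copyFromLocalFile(srcPath, dstPath);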
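Read method 1 depends on how writeUTF frames its data. FSDataOutputStream and FSDataInputStream extend java.io.DataOutputStream and java.io.DataInputStream, so the framing can be seen without a cluster. The sketch below is only an illustration: the class name WriteUtfDemo and its two helper methods are hypothetical, and in-memory byte arrays stand in for an HDFS file.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;

// Hypothetical demo class; byte arrays stand in for an HDFS file.
class WriteUtfDemo {

    // Encodes text the way writeUTF does: a 2-byte length, then the string bytes.
    static byte[] encodeWithWriteUtf(String text) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buffer)) {
            out.writeUTF(text);
        }
        return buffer.toByteArray();
    }

    // Reads one string back with readUTF; throws if the length prefix is not valid.
    static String decodeWithReadUtf(byte[] bytes) throws IOException {
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(bytes))) {
            return in.readUTF();
        }
    }

    public static void main(String[] args) throws IOException {
        byte[] framed = encodeWithWriteUtf("hello hadoop!");
        System.out.println(framed.length);             // 15: 2-byte prefix + 13 text bytes
        System.out.println(decodeWithReadUtf(framed)); // hello hadoop!

        // Plain text has no length prefix, so readUTF misreads "he" as a length.
        byte[] plain = "hello hadoop!".getBytes(StandardCharsets.UTF_8);
        try {
            decodeWithReadUtf(plain);
        } catch (IOException e) {
            System.out.println("readUTF failed on plain text: " + e.getClass().getSimpleName());
        }
    }
}
```

This is why a file uploaded from local disk or written by another tool is better read with the byte-copy approach of method 2.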

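For the manual append, the length passed to write has to come from the same byte array that is being written. The sketch below shows what goes wrong when the length is taken from "end hadoop!" while the bytes come from "end hadoop". It is an illustration under assumptions: the class AppendLengthDemo and its methods are hypothetical, and an in-memory stream stands in for the stream returned by fs.append. The HDFS stream is assumed to reject the out-of-range request in a similar way, though the exact exception type may differ by Hadoop version.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;

// Hypothetical demo class; an in-memory stream stands in for fs.append(path).
class AppendLengthDemo {

    // Length from one string, bytes from a shorter one: the request is out of range.
    static void writeWithMismatchedLength(DataOutputStream out) throws IOException {
        int readLen = "end hadoop!".getBytes(StandardCharsets.UTF_8).length; // 11
        byte[] data = "end hadoop".getBytes(StandardCharsets.UTF_8);         // 10 bytes
        out.write(data, 0, readLen); // asks for 11 bytes from a 10-byte array
    }

    // Data and length come from the same array, so the request is always in range.
    static void writeWithMatchingLength(DataOutputStream out) throws IOException {
        byte[] data = "end hadoop!".getBytes(StandardCharsets.UTF_8);
        out.write(data, 0, data.length);
    }

    public static void main(String[] args) throws IOException {
        ByteArrayOutputStream sink = new ByteArrayOutputStream();
        DataOutputStream out = new DataOutputStream(sink);
        try {
            writeWithMismatchedLength(out);
        } catch (IndexOutOfBoundsException e) {
            System.out.println("mismatched length rejected");
        }
        writeWithMatchingLength(out);
        System.out.println(new String(sink.toByteArray(), StandardCharsets.UTF_8));
    }
}
```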
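The three snippets below lost their loop bodies when the page was extracted (the text after each `i` was cut off). The loop conditions shown are reconstructed from the surrounding variables; the bodies are left empty. They additionally use java.io.IOException, org.apache.hadoop.fs.FileStatus, org.apache.hadoop.fs.BlockLocation, org.apache.hadoop.hdfs.DistributedFileSystem and org.apache.hadoop.hdfs.protocol.DatanodeInfo.

List all subdirectories and files under a given directory

    getFile(path, fs);

    public static void getFile(Path path, FileSystem fs) throws IOException {
        FileStatus[] fileStatus = fs.listStatus(path);
        for (int i = 0; i < fileStatus.length; i++) {
            // loop body missing in the source
        }
    }

Find where a file's blocks are stored in the HDFS cluster

    Path path = new Path("hdfs://master:9000/input/input3.txt");
    FileStatus status = fs.getFileStatus(path);
    BlockLocation[] locations = fs.getFileBlockLocations(status, 0, status.getLen());
    int length = locations.length;
    for (int i = 0; i < length; i++) {
        // loop body missing in the source
    }

Get the names of all nodes in the HDFS cluster

    DistributedFileSystem dfs = (DistributedFileSystem) fs;
    DatanodeInfo[] dataNodeStats = dfs.getDataNodeStats();
    for (int i = 0; i < dataNodeStats.length; i++) {
        // loop body missing in the source
    }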

Reposted from: https://bupt-xbz.blog.csdn.net/article/details/79165000
