《利用Python 进行数据分析》 - 笔记(5)

发布日期:2021-06-30 19:50:06

浏览次数:2

分类:技术文章

本文共 20902 字,大约阅读时间需要 69 分钟。

问题导读:

1.合并数据集

2.重塑和轴向旋转

3.数据转换(待续)

解决方案:

合并数据集

(1)数据库风格的DataFrame合并

- pandas的merge 函数 将通过一个或多个键将行连接起来

- 如果没有指定列,merge 就会直接依据相同列名的那一列进行连接

In [3]: df1 = pd.DataFrame( ...: {'key':['b','b','a','c','a','a','b'], ...: 'data1':range(7)} ...: )In [4]: df1Out[4]: data1 key0 0 b1 1 b2 2 a3 3 c4 4 a5 5 a6 6 b[7 rows x 2 columns]In [5]: df2 = pd.DataFrame( ...: {'key':['a','b','d'], ...: 'data2':range(3)} ...: )In [6]: df2Out[6]: data2 key0 0 a1 1 b2 2 d[3 rows x 2 columns]In [7]: pd.merge(df1,df2)Out[7]: data1 key data20 0 b 11 1 b 12 6 b 13 2 a 04 4 a 05 5 a 0[6 rows x 3 columns]In [8]: pd.merge(df1,df2, on='key')Out[8]: data1 key data20 0 b 11 1 b 12 6 b 13 2 a 04 4 a 05 5 a 0[6 rows x 3 columns] - 如果两个对象的列名不同,也可以在指定之后,进行合并

In [10]: df3 = pd.DataFrame({'lkey':['b','b','a','c','a','a','b'], ....: 'data1':range(7)})In [11]: df4 = pd.DataFrame({'rkey':['a','b','d'],'data2':range(3)})In [12]: pd.merge(df3,df4,left_on='lkey',right_on='rkey')Out[12]: data1 lkey data2 rkey0 0 b 1 b1 1 b 1 b2 6 b 1 b3 2 a 0 a4 4 a 0 a5 5 a 0 a[6 rows x 4 columns] - merge 做的是交集,其他方式有“left”,“right”,“outer”

- outer 外连接,求取的是键的并集

In [13]: pd.merge(df1,df2,how='outer')Out[13]: data1 key data20 0 b 11 1 b 12 6 b 13 2 a 04 4 a 05 5 a 06 3 c NaN7 NaN d 2[8 rows x 3 columns]

- 多对多

In [15]: df1 = pd.DataFrame({'key':['b','b','a','c','a','b'], ....: 'data1':range(6)})In [16]: df2 = pd.DataFrame({'key':['a','b','a','b','d'], ....: 'data2':range(5)})In [17]: pd.merge(df1,df2,on='key', how = 'left')Out[17]: data1 key data20 0 b 11 0 b 32 1 b 13 1 b 34 5 b 15 5 b 36 2 a 07 2 a 28 4 a 09 4 a 210 3 c NaN[11 rows x 3 columns]In [18]: pd.merge(df1,df2,on='key', how = 'right')Out[18]: data1 key data20 0 b 11 1 b 12 5 b 13 0 b 34 1 b 35 5 b 36 2 a 07 4 a 08 2 a 29 4 a 210 NaN d 4[11 rows x 3 columns]In [19]: pd.merge(df1,df2,on='key',how='inner')Out[19]: data1 key data20 0 b 11 0 b 32 1 b 13 1 b 34 5 b 15 5 b 36 2 a 07 2 a 28 4 a 09 4 a 2[10 rows x 3 columns]In [21]: pd.merge(df1,df2,on='key',how='outer')Out[21]: data1 key data20 0 b 11 0 b 32 1 b 13 1 b 34 5 b 15 5 b 36 2 a 07 2 a 28 4 a 09 4 a 210 3 c NaN11 NaN d 4[12 rows x 3 columns] - 根据多个键进行合并,并传入一个由列名组成的列表即可

In [27]: left = pd.DataFrame({'key1':['foo','foo','bar'],'key2':['one','two','one'],'key3':[1,2,3]})In [28]: right = pd.DataFrame({'key1':['foo','foo','foo','bar'],'key2':['one','one','one','two'],'rval':[4,5,6,7]})In [29]: pd.merge(left,right,on=['key1','key2'],how='outer')Out[29]: key1 key2 key3 rval0 foo one 1 41 foo one 1 52 foo one 1 63 foo two 2 NaN4 bar one 3 NaN5 bar two NaN 7[6 rows x 4 columns] - merge 的suffixes选项,用于附加到左右两个Dataframe对象的重叠列名上的字符串

- 用来解决为合并的相同列名的区分

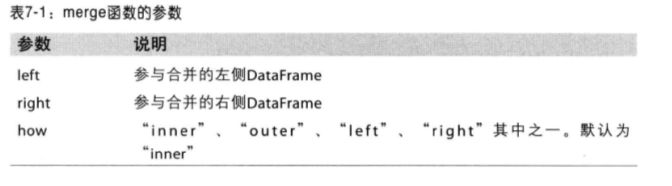

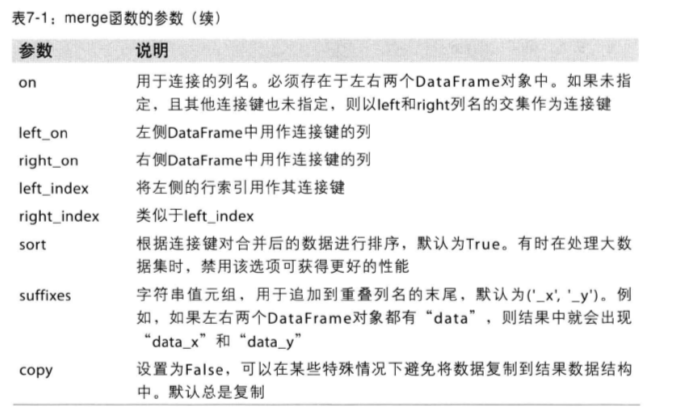

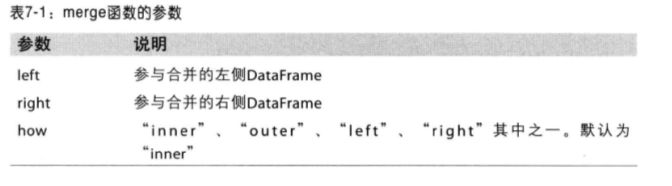

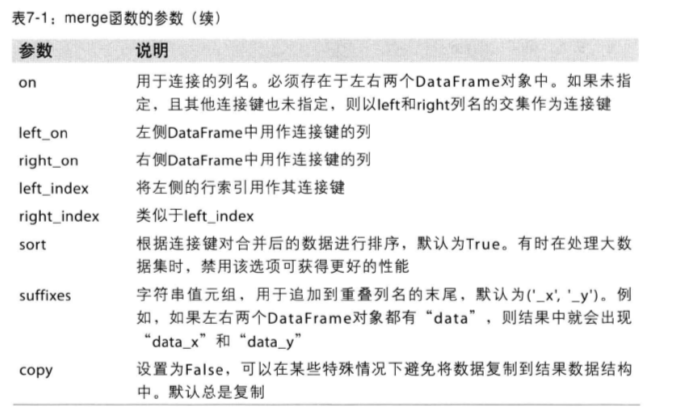

In [30]: pd.merge(left,right,on='key1')Out[30]: key1 key2_x key3 key2_y rval0 foo one 1 one 41 foo one 1 one 52 foo one 1 one 63 foo two 2 one 44 foo two 2 one 55 foo two 2 one 66 bar one 3 two 7[7 rows x 5 columns]In [31]: pd.merge(left,right,on='key1',suffixes=('_left','_right'))Out[31]: key1 key2_left key3 key2_right rval0 foo one 1 one 41 foo one 1 one 52 foo one 1 one 63 foo two 2 one 44 foo two 2 one 55 foo two 2 one 66 bar one 3 two 7[7 rows x 5 columns] - merge 函数的参数

(2)索引上的合并

- DataFrame 的索引也可以被用作连接键

- 传入 left_index = True / right_index = True

In [37]: left1 = pd.DataFrame({'key':['a','b','a','a','b','c'],'value':range(6)})In [38]: right1 = pd.DataFrame({'group_val':[3.5,7]},index = ['a','b'])In [39]: pd.merge(left1,right1,left_on='key',right_index=True)Out[39]: key value group_val0 a 0 3.52 a 2 3.53 a 3 3.51 b 1 7.04 b 4 7.0[5 rows x 3 columns]In [40]: right1Out[40]: group_vala 3.5b 7.0[2 rows x 1 columns]In [41]: left1Out[41]: key value0 a 01 b 12 a 23 a 34 b 45 c 5[6 rows x 2 columns] - 对于层次化的索引,我们要以列表的形式指明用作合并键的多个列

In [48]: lefth = pd.DataFrame({'key1':['Ohio','Ohio','Ohio','Nevada','Nevada'],'key2':[2000,2001,2002,2001,2002],'data':np.arange(5.)})In [49]: righth = pd.DataFrame(np.arange(12).reshape((6,2)),index = [['Nevada','Nevada','Ohio','Ohio','Ohio','Ohio'],[2001,2000,2000,2000,2001,2002]],columns = ['event1','event2'])In [50]: lefthOut[50]: data key1 key20 0 Ohio 20001 1 Ohio 20012 2 Ohio 20023 3 Nevada 20014 4 Nevada 2002[5 rows x 3 columns]In [52]: righthOut[52]: event1 event2Nevada 2001 0 1 2000 2 3Ohio 2000 4 5 2000 6 7 2001 8 9 2002 10 11[6 rows x 2 columns]In [53]: pd.merge(lefth,righth,left_on=['key1','key2'],right_index=True)Out[53]: data key1 key2 event1 event20 0 Ohio 2000 4 50 0 Ohio 2000 6 71 1 Ohio 2001 8 92 2 Ohio 2002 10 113 3 Nevada 2001 0 1[5 rows x 5 columns]In [54]: pd.merge(lefth,righth,left_on=['key1','key2'],right_index=True,how='outer')Out[54]: data key1 key2 event1 event20 0 Ohio 2000 4 50 0 Ohio 2000 6 71 1 Ohio 2001 8 92 2 Ohio 2002 10 113 3 Nevada 2001 0 14 4 Nevada 2002 NaN NaN4 NaN Nevada 2000 2 3[7 rows x 5 columns] - 同时使用合并双方的索引

In [55]: left2 = pd.DataFrame([[1.,2.],[3.,4.],[5.,6.]], index = ['a','c','e'], ....: columns= ['Ohio','Nevada'])In [56]: right2 = pd.DataFrame([[7.,8.],[9.,10.],[11.,12],[13,14]], ....: index = ['b','c','d','e'],columns=['Missouri','Alabama'])In [57]: left2Out[57]: Ohio Nevadaa 1 2c 3 4e 5 6[3 rows x 2 columns]In [58]: right2Out[58]: Missouri Alabamab 7 8c 9 10d 11 12e 13 14[4 rows x 2 columns]In [59]: pd.merge(left2,right2,how='outer',left_index=True,right_index=True)Out[59]: Ohio Nevada Missouri Alabamaa 1 2 NaN NaNb NaN NaN 7 8c 3 4 9 10d NaN NaN 11 12e 5 6 13 14[5 rows x 4 columns]

- DataFrame有一个join 实例方法,他可以更为方便地实现按索引合并

- join 方法默认作的是左连接

- 该方法还可以使调用者的某个列跟参数DataFrame的索引进行连接

In [60]: left2.join(right2,how='outer')Out[60]: Ohio Nevada Missouri Alabamaa 1 2 NaN NaNb NaN NaN 7 8c 3 4 9 10d NaN NaN 11 12e 5 6 13 14[5 rows x 4 columns]In [61]: left2.join(right2)Out[61]: Ohio Nevada Missouri Alabamaa 1 2 NaN NaNc 3 4 9 10e 5 6 13 14[3 rows x 4 columns]In [62]: left1Out[62]: key value0 a 01 b 12 a 23 a 34 b 45 c 5[6 rows x 2 columns]In [63]: right1Out[63]: group_vala 3.5b 7.0[2 rows x 1 columns]In [64]: left1.join(right1,on='key')Out[64]: key value group_val0 a 0 3.51 b 1 7.02 a 2 3.53 a 3 3.54 b 4 7.05 c 5 NaN[6 rows x 3 columns]

- join 一组DataFrame(后面会了解 concat 函数)

In [65]: another = pd.DataFrame([[7.,8.],[9.,10.],[11.,12.],[16.,17.]], ....: index=['a','c','e','f'],columns=['New York','Oregon'])In [66]: left2.join([right2,another])Out[66]: Ohio Nevada Missouri Alabama New York Oregona 1 2 NaN NaN 7 8c 3 4 9 10 9 10e 5 6 13 14 11 12[3 rows x 6 columns]

(3)轴向连接

- 轴向连接实际上可以理解为数据的连接、绑定、或堆叠

# coding=utf-8import numpy as npimport pandas as pd"""numpy 的concatenate 函数:该函数用于合并原始的numpy 的数组"""arr = np.arange(12).reshape((3, 4))print arr'''[[ 0 1 2 3] [ 4 5 6 7] [ 8 9 10 11]]'''print np.concatenate([arr, arr], axis=1)'''[[ 0 1 2 3 0 1 2 3] [ 4 5 6 7 4 5 6 7] [ 8 9 10 11 8 9 10 11]]'''"""对于pandas 对象 Series 和 DataFrame 他们的带有标签的轴使你能够进一步推广数组的连接运算""""""(1) 各对象其他轴上的索引不同时,那些轴做的交集还是并集?答案:默认是并集"""# concat 默认在axis=0 上工作s1 = pd.Series([0,1], index=['a','b'])# s1 = pd.Series([1,2,2], index=['d','b','f'])s2 = pd.Series([2,3,4], index=['c','d','e'])s3 = pd.Series([5,6], index=['f','g'])print pd.concat([s1,s2,s3])'''a 0b 1c 2d 3e 4f 5g 6dtype: int64'''# 如果要求在axis=1 进行操作则结果变成一个DataFrameprint pd.concat([s1,s2,s3], axis=1)''' 0 1 2a 0 NaN NaNb 1 NaN NaNc NaN 2 NaNd NaN 3 NaNe NaN 4 NaNf NaN NaN 5g NaN NaN 6'''# 传入的如果是join=‘inner’ 即可得到他们的交集s4 = pd.concat([s1 * 5, s3])print pd.concat([s1,s4], axis=1)'''dtype: int64 0 1a 0 0b 1 5f NaN 5g NaN 6[4 rows x 2 columns]'''print pd.concat([s1,s4],axis=1,join='inner')''' 0 1a 0 0b 1 5[2 rows x 2 columns]'''# 指定轴进行连接操作,如果连接的两个Series 都没有该轴,则值为NaNprint pd.concat([s1,s4], axis=1, join_axes=[['a','c','b','e']])''' 0 1a 0 0c NaN NaNb 1 5e NaN NaN[4 rows x 2 columns]'''# 参与连接的片段的在结果中区分不开,这时候我们使用keys 参数建立一个层次化索引result = pd.concat([s1,s2,s3], keys=['one','two','three'])print result'''one a 0 b 1two c 2 d 3 e 4three f 5 g 6dtype: int64'''"""s1 = pd.Series([1,2,6], index=['a','b','f'])s2 = pd.Series([3,4], index=['c','d'])s3 = pd.Series([5,6], index=['e','f'])result = pd.concat([s1,s2,s3], keys=['one','two','three'])print result'''one a 1 b 2 f 6two c 3 d 4three e 5 f 6dtype: int64'''"""# print result.unstack()# 沿axis=1 对series 进行合并,则keys 就会成为DataFrame的列头print pd.concat([s1,s2,s3], axis=1, keys=['one','two','three'])print pd.concat([s1,s2,s3], axis=1)'''dtype: int64 one two threea 0 NaN NaNb 1 NaN NaNc NaN 2 NaNd NaN 3 NaNe NaN 4 NaNf NaN NaN 5g NaN NaN 6[7 rows x 3 columns] 0 1 2a 0 NaN NaNb 1 NaN NaNc NaN 2 NaNd NaN 3 NaNe NaN 4 NaNf NaN NaN 5g NaN NaN 6[7 rows x 3 columns]'''

- 以上对于Series 的逻辑对于DataFrame也一样

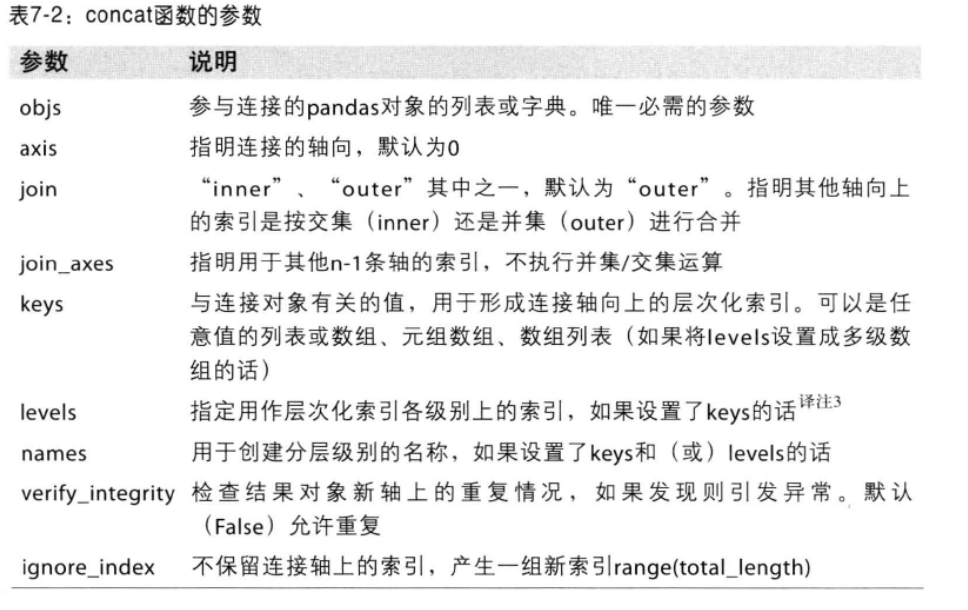

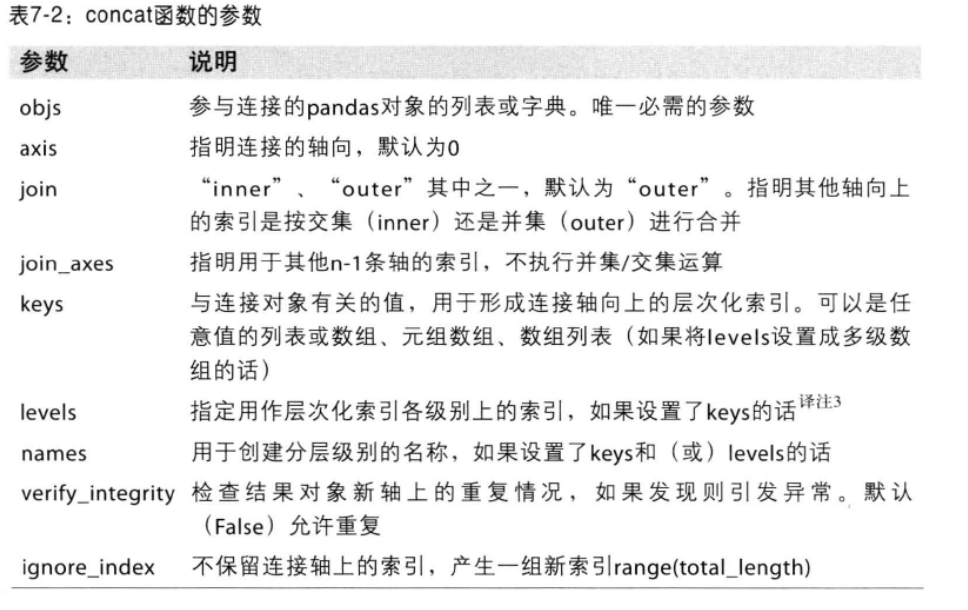

# coding=utf-8import pandas as pdimport numpy as npdf1 = pd.DataFrame(np.arange(6).reshape(3,2), index=['a','b','c'], columns=['one','two'])df2 = pd.DataFrame(5+np.arange(4).reshape(2,2), index=['a','c'], columns=['three','four'])print pd.concat([df1,df2],axis=1, keys=['level1','levle2'])''' level1 levle2 one two three foura 0 1 5 6b 2 3 NaN NaNc 4 5 7 8[3 rows x 4 columns]'''# 如果传入的不是列表而是一个字典,则字典的建就会被当做keys选项的值dic = {'level1':df1, 'level2':df2}print pd.concat(dic,axis=1)''' level1 level2 one two three foura 0 1 5 6b 2 3 NaN NaNc 4 5 7 8[3 rows x 4 columns]''' - concat 函数的参数列表

# coding=utf-8import pandas as pdimport numpy as npdf1 = pd.DataFrame(np.random.randn(3,4), columns=['a','b','c','d'])df2 = pd.DataFrame(np.random.randn(2,3), columns=['b','d','a'])print pd.concat([df1,df2])# 不保留连接轴的索引,创建新索引print pd.concat([df1,df2], ignore_index=True)print pd.concat([df1,df2], ignore_index=False)

(4)合并重叠数据

# coding=utf-8import pandas as pdimport numpy as npa = pd.Series([np.nan, 2.5, np.nan, 3.5, 4.5, np.nan], index=['f','e','d','c','b','a'])b = pd.Series(np.arange(len(a), dtype=np.float64), index=['f','e','d','c','b','a'])b[-1] = np.nanprint np.where(pd.isnull(a), b, a)print b[:-2].combine_first(a[2:])'''[ 0. 2.5 2. 3.5 4.5 nan]a NaNb 4.5c 3.0d 2.0e 1.0f 0.0dtype: float64'''# 对于DataFrame, combine_first 自然也是会在列上做同样的事情,我们可以把这个动作当成是参数对象数据对调用对象的数据打补丁df1 = pd.DataFrame({ 'a':[1.,np.nan, 5., np.nan], 'b':[np.nan, 2., np.nan, 6.], 'c':range(2,18,4)})df2 = pd.DataFrame({ 'a':[5., 4., np.nan, 3., 7.], 'b':[np.nan, 3., 4., 6., 8.]})print df1.combine_first(df2) 重塑和轴向旋转

(1)重塑层次化索引

# coding=utf-8import pandas as pdimport numpy as np"""层次化索引为DataFrame 数据的重排任务提供了一种具有良好一致性的方式(1) stack 将数据的列“旋转”为行(2) unstack 将数据的行“旋转”为列"""data = pd.DataFrame(np.arange(6).reshape((2,3)), index=pd.Index(['Ohio', 'Colorado'], name='state'), columns=pd.Index(['one','two','three'], name='number') )print data# 我们使用stack方法将DataFrame的列转换成行,得到一个Seriesresult = data.stack()print result# 同样使用unstack也可以将一个层次化索引的Series 转化得到一个DataFrameprint result.unstack()'''number one two threestateOhio 0 1 2Colorado 3 4 5[2 rows x 3 columns]state numberOhio one 0 two 1 three 2Colorado one 3 two 4 three 5dtype: int64number one two threestateOhio 0 1 2Colorado 3 4 5[2 rows x 3 columns]'''# unstack操作的是最内层的(stack也是)# 当我们传入的分层级别的编号或名称,同样可以对其他级别进行unstack 操作print result.unstack(0) == result.unstack('state')'''state Ohio Coloradonumberone True Truetwo True Truethree True True[3 rows x 2 columns]''' - 当不是所有级别值都能在各分组中找到,unstack 操作可能会引入缺失数据

- stack 默认会滤除缺失数据,因此该运算是可逆的

# coding=utf-8import pandas as pdimport numpy as nps1 = pd.Series([0, 1, 2, 3], index=['a', 'b', 'c', 'd'])s2 = pd.Series([4, 5, 6], index=['c', 'd', 'e'])data2 = pd.concat([s1, s2], keys=['one','two'])print data2# 连接 -> 层次化索引的Series -> DataFrame(行:每个Series;列:Series的索引)print data2.unstack()'''one a 0 b 1 c 2 d 3two c 4 d 5 e 6dtype: int64 a b c d eone 0 1 2 3 NaNtwo NaN NaN 4 5 6[2 rows x 5 columns]'''print data2.unstack().stack()print data2.unstack().stack(dropna=False)'''one a 0 b 1 c 2 d 3two c 4 d 5 e 6dtype: float64one a 0 b 1 c 2 d 3 e NaNtwo a NaN b NaN c 4 d 5 e 6dtype: float64'''

- 转转轴级别最低

# 对DataFrame进行操作,旋转轴 的级别将会成为结果中最低级别df = pd.DataFrame( {'left': result, 'right': result + 5}, columns=pd.Index(['left', 'right'], name='side'))print df.unstack('state')print df.unstack('state').stack('side') (2)将“长格式”旋转为“宽格式”

- 将转换成我们需要的数据格式

- 关系型数据库中存储结构

# coding=utf-8import pandas as pdimport numpy as npdata = pd.read_csv("/home/peerslee/py/pydata/pydata-book-master/ch07/macrodata.csv")frame01 = pd.DataFrame(data, columns=['year', 'realgdp', 'infl', 'unemp'])path01 = '/home/peerslee/py/pydata/pydata-book-master/ch07/macrodata01.csv'frame01.to_csv(path01, index=False, header=False) # 将去除index 和 headernames02 = ['year', 'realgdp', 'infl', 'unemp']frame02 = pd.read_table(path01, sep=',', names=names02, index_col='year') # 将'year'列设置为行索引path02 = '/home/peerslee/py/pydata/pydata-book-master/ch07/macrodata02.csv'frame02.stack().to_csv(path02) # 轴向旋转之后写入文件中,year列会自动向后填充names03 = ['date','item','value']frame03 = pd.read_table(path02, sep=',', names=names03,)print frame03''' date item value0 1959 realgdp 2710.3491 1959 infl 0.0002 1959 unemp 5.8003 1959 realgdp 2778.8014 1959 infl 2.3405 1959 unemp 5.1006 1959 realgdp 2775.4887 1959 infl 2.7408 1959 unemp 5.3009 1959 realgdp 2785.20410 1959 infl 0.270'''result_path = '/home/peerslee/py/pydata/pydata-book-master/ch07/result_data.csv'frame03.to_csv(result_path) # 将数据保存起来 - 关系型数据库(例如mysql)中就是这样存储数据的

但是我在进行数据pivot 的时候,出现了错误: raise ValueError('Index contains duplicate entries, 'ValueError: Index contains duplicate entries, cannot reshape - 所以我们要将月份也加进来,但是我不知道,怎么搞,先放在这,以后再说(如果朋友哪位朋友有思路欢迎交流学习)

- 我们在操作上面的数据的时候没有那么轻松,我们常常要将数据转换为DataFrame 的格式,不同的item 值分别形成一列

# coding=utf-8import pandas as pdimport numpy as np"""DataFrame 中的pivot方法可以将“长格式”旋转为“宽格式”"""# 因为没有数据所以我们只能自己写点ldata = pd.DataFrame({'date':['03-31','03-31','03-31','06-30','06-30','06-30'], 'item':['real','infl','unemp','real','infl','unemp'], 'value':['2710.','000.','5.8','2778.','2.34','5.1'] })print ldata# 将date作为行索引的名字,item为列索引的名字,将value填充进去pivoted = ldata.pivot('date','item','value')print pivoted'''item infl real unempdate03-31 000. 2710. 5.806-30 2.34 2778. 5.1[2 rows x 3 columns]'''# 将需要重塑的列扩为两列ldata['value2'] = np.random.randn(len(ldata))print ldata# 忽略pivot的最后一个参数,会得到一个带有层次化的列pivoted = ldata.pivot('date','item')print pivoted''' value value2item infl real unemp infl real unempdate03-31 000. 2710. 5.8 1.059406 0.437246 0.10698706-30 2.34 2778. 5.1 -1.087665 -0.811100 -0.579266[2 rows x 6 columns]'''print pivoted['value'][:5]'''item infl real unempdate03-31 000. 2710. 5.806-30 2.34 2778. 5.1[2 rows x 3 columns]'''# 这个操作是完整的pivot 操作unstacked = ldata.set_index(['date','item']).unstack('item')print unstacked''' value value2item infl real unemp infl real unempdate03-31 000. 2710. 5.8 -1.018416 -1.476397 1.57915106-30 2.34 2778. 5.1 0.863437 1.606538 -1.147549[2 rows x 6 columns]''' 数据转换:

1.移除重复数据

# coding=utf-8import pandas as pdimport numpy as npdata = pd.DataFrame({'k1': ['one'] * 3 + ['two'] * 4, 'k2': [1, 1, 2, 3, 3, 4, 4]})print data''' k1 k20 one 11 one 12 one 23 two 34 two 35 two 46 two 4[7 rows x 2 columns]'''"""DataFrame 的duplicated 方法返回一个布尔型Series,表示各行是否是重复行"""print data.duplicated()'''0 False1 True2 False3 False4 True5 False6 Truedtype: bool'''"""DataFrame 的drop_duplicated 方法用于移除了重复行的DataFrame"""print data.drop_duplicates()''' k1 k20 one 12 one 23 two 35 two 4[4 rows x 2 columns]'''"""具体根据 某一列 来判断是否有重复值"""data['v1'] = range(7)print data.drop_duplicates(['k1'])''' k1 k2 v10 one 1 03 two 3 3[2 rows x 3 columns]'''"""默认保留的是第1个值,如果想保留最后一个值则传入 take_last=True"""print data.drop_duplicates(['k1','k2'], take_last=True)''' k1 k2 v11 one 1 12 one 2 24 two 3 46 two 4 6[4 rows x 3 columns]''' 2.利用函数或映射进行数据转换

# coding=utf-8import pandas as pdimport numpy as np# 一张食物和重量的表格data = pd.DataFrame({'food':['bacon','pulled pork','bacon','Pastrami','corned beef', 'Bacon','pastrami','honey ham','nova lox'], 'ounces':[4,3,12,6,7.5,8,3,5,6]})# 食物和动物的表格映射meat_to_ainmal = { 'bacon':'pig', 'pulled pork':'pig', 'pastrami':'cow', 'corned beef':'pig', 'nova lox':'salmon'}"""Series 的map 方法接受一个函数或含有映射关系的字典型对象"""# 这里我们先将表1 中的所有食物转换为小写,再做一个mapdata['animal'] = data['food'].map(str.lower).map(meat_to_ainmal)print data''' food ounces animal0 bacon 4.0 pig1 pulled pork 3.0 pig2 bacon 12.0 pig3 Pastrami 6.0 cow4 corned beef 7.5 pig5 Bacon 8.0 pig6 pastrami 3.0 cow7 honey ham 5.0 NaN8 nova lox 6.0 salmon[9 rows x 3 columns]'''"""我们可以传入一个能够完成全部这些工作的函数""" 3.替换值

# coding=utf-8import pandas as pdimport numpy as npdata = pd.Series([1., -999., 2., -999., -1000., 3.])"""我们要使用pandas 将-999 这样的数据替换成 NA 值"""data.replace(-999,np.nan)"""如果希望一次性替换多个值,可以传入一个由待替换值组成的列表以及一个替换值"""data.replace([-999,-1000],np.nan)"""如果希望对不同的值进行不同的替换,则传入一个由替换关系组成的列表即可"""data.replace([-999,-1000],[np.nan,0])"""传入的参数也可以是字典"""data.replace({-999:np.nan, -1000:0}) 4.重命名轴索引

# coding=utf-8import pandas as pdimport numpy as np"""轴标签可以通过函数或映射进行转换,从而得到一个新对象,轴还可以被就地修改"""data = pd.DataFrame(np.arange(12).reshape((3,4)), index=['Ohio','Colorado','New York',], columns=['one','two','three','four'])"""轴标签也有一个map 方法"""print data.index.map(str.upper)"""直接赋值给index"""data.index = data.index.map(str.upper)print data"""创建数据集的转换版本,而不是修改原始数据"""data.rename(index=str.title, columns=str.upper)"""rename 可以结合字典型对象实现对部分轴标签的更新"""data.rename(index={'OHIO':'INDIANA'}, columns={'three','peekaboo'})"""rename 也可以就地对DataFrame 进行修改,inplace=True"""_=data.rename(index={'OHIO':'INDIANA'},inplace=True) 转载地址:https://lipenglin.blog.csdn.net/article/details/51498713 如侵犯您的版权,请留言回复原文章的地址,我们会给您删除此文章,给您带来不便请您谅解!

发表评论

最新留言

路过按个爪印,很不错,赞一个!

[***.219.124.196]2024年04月11日 06时13分58秒

关于作者

喝酒易醉,品茶养心,人生如梦,品茶悟道,何以解忧?唯有杜康!

-- 愿君每日到此一游!

推荐文章

fmt在bss段(neepusec_easy_format)

2019-04-30

[double free] 9447 CTF : Search Engine

2019-04-30

python 函数式编程

2019-04-30

python编码

2019-04-30

scala maven plugin

2019-04-30

flink 1-个人理解

2019-04-30

redis cli

2019-04-30

redis api

2019-04-30

flink physical partition

2019-04-30

java 解析json

2019-04-30

java http请求

2019-04-30

tensorflow 数据格式

2019-04-30

tf rnn layer

2019-04-30

tf input layer

2019-04-30

tf model create

2019-04-30

tf dense layer两种创建方式的对比和numpy实现

2019-04-30

tf initializer

2019-04-30

tf 从RNN到BERT

2019-04-30

tf keras SimpleRNN源码解析

2019-04-30