AppRTC Demo Call Flow

1. CallActivity#onCreate calls startCall to connect to a room, creating it if necessary.

2. WebSocketRTCClient#connectToRoom sends one request to the server.

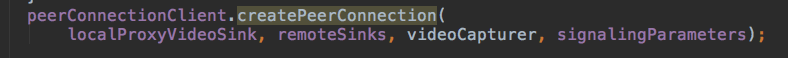

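3. The response calls back into CallActivity#onConnectedToRoom, which starts creating the peer connection and passes in the video capturer, the local and remote VideoSinks, and the related parameters.

localProxyVideoSink is a proxy for the local video renderer.

remoteSinks holds the proxies for the remote video renderers; it is a list.

videoCapturer is the local video capturer.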

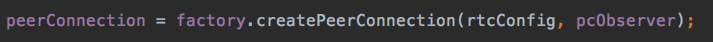
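The proxy idea can be sketched in plain Java. This is only an illustration of the pattern, not the demo's code: `Frame` and `Sink` are hypothetical stand-ins for `org.webrtc.VideoFrame` and `org.webrtc.VideoSink`, so that the sketch runs without the WebRTC library.

```java
import java.util.ArrayList;
import java.util.List;

final class ProxySinkSketch {
  // Hypothetical stand-in for org.webrtc.VideoFrame.
  static final class Frame {
    final int id;

    Frame(int id) {
      this.id = id;
    }
  }

  // Hypothetical stand-in for org.webrtc.VideoSink.
  interface Sink {
    void onFrame(Frame frame);
  }

  // Forwards frames to whatever target is currently set, so the real
  // renderer can be attached or swapped without touching the video track.
  static final class ProxySink implements Sink {
    private Sink target;

    synchronized void setTarget(Sink target) {
      this.target = target;
    }

    @Override
    public synchronized void onFrame(Frame frame) {
      if (target == null) {
        return; // No renderer attached yet: drop the frame.
      }
      target.onFrame(frame);
    }
  }

  // Sends three frames; only those sent after a target is attached are rendered.
  static List<Integer> run() {
    final List<Integer> rendered = new ArrayList<>();
    ProxySink proxy = new ProxySink();
    proxy.onFrame(new Frame(1)); // Dropped, no target yet.
    proxy.setTarget(frame -> rendered.add(frame.id));
    proxy.onFrame(new Frame(2));
    proxy.onFrame(new Frame(3));
    return rendered;
  }

  public static void main(String[] args) {
    System.out.println(run());
  }
}
```

The track only ever sees the proxy, which is why the demo can hand the sinks to the peer connection before deciding which on-screen renderer shows which stream.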

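4. PeerConnectionClient#createPeerConnectionInternal creates the PeerConnection object and the video track.

factory is created in CallActivity#onCreate.

pcObserver is the peer connection observer; it receives callbacks for messages from the native layer.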

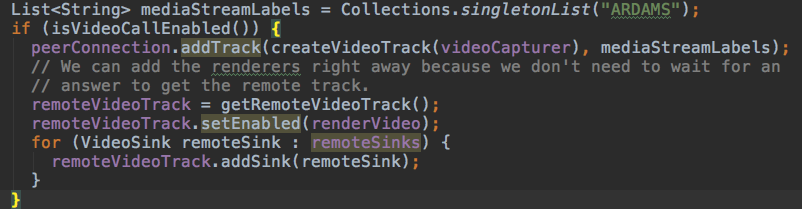

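If video is enabled, the locally captured data is added to a track (the work is done in the underlying C++ layer).

For remote data, getRemoteVideoTrack (which calls into the C++ layer) fetches the remote video track, and the remoteSinks passed in earlier are added to it.

The audio track is added next.

That is essentially the end of the AppRTC demo's call flow. At this point the video preview is visible on the phone.

The next part looks at where this VideoCapturer comes from.

Where the VideoCapturer comes from (or, how the data is captured)

First, a reference.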

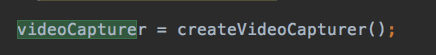

1. Back to where it all started: CallActivity#onConnectedToRoomInternal

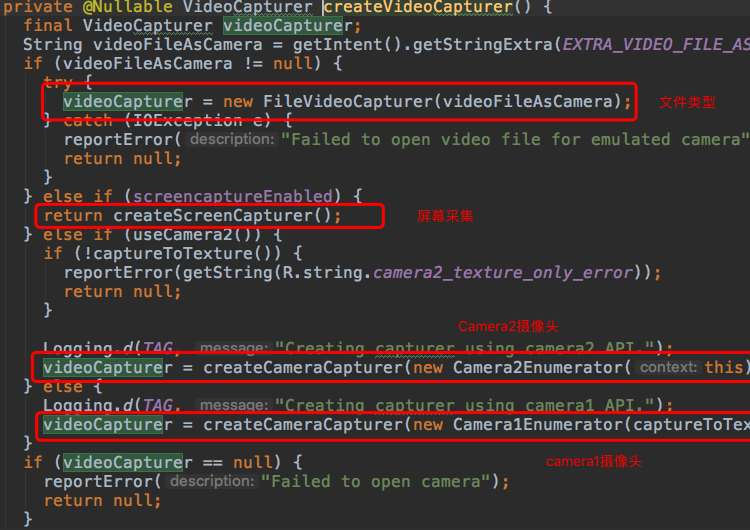

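2. CallActivity#createVideoCapturer

For video capture, WebRTC exposes three main capturer types.

ScreenCapturerAndroid ==> captures video from the screen

FileVideoCapturer ==> captures video from a file

CameraCapturer ==> captures video from the camera; it is further split into Camera1Capturer and Camera2Capturer

Whichever one is used, the method only has to return a VideoCapturer object.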
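The choice between the three sources can be sketched as a small decision function. The parameters below are hypothetical simplifications of the demo's intent extras and capability checks, and the order of the checks is an assumption that may differ between versions of the demo; the function returns class names as strings so that it runs without Android or WebRTC.

```java
final class CapturerChoiceSketch {
  // Returns the name of the capturer class that would be created.
  // videoFile, screenCapture and camera2Supported are illustrative inputs,
  // not the demo's actual fields.
  static String choose(String videoFile, boolean screenCapture, boolean camera2Supported) {
    if (videoFile != null) {
      return "FileVideoCapturer"; // A file was supplied as the video source.
    }
    if (screenCapture) {
      return "ScreenCapturerAndroid"; // Capture the screen instead of a camera.
    }
    // Otherwise fall back to a camera, picking the API the device supports.
    return camera2Supported ? "Camera2Capturer" : "Camera1Capturer";
  }

  public static void main(String[] args) {
    System.out.println(choose(null, false, true));
    System.out.println(choose("clip.y4m", false, true));
  }
}
```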

Next, step into the method that creates the camera capturer.

3. CallActivity#createCameraCapturer

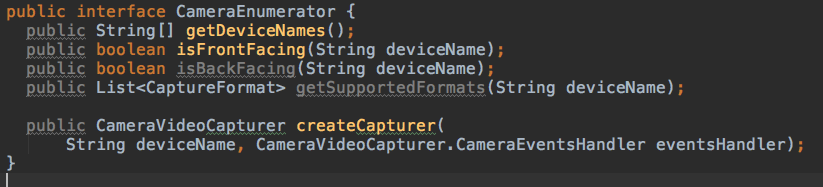

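A CameraEnumerator object is used here to create the video capturer.

Take a closer look at the CameraEnumerator interface.

It can list the device names, tell whether a device is a front-facing or back-facing camera, and create a camera video capturer.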

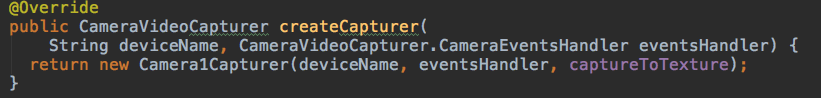
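A minimal sketch of how an enumerator like this can be used to pick a camera: prefer a front-facing device and fall back to any other. The `Enumerator` interface here is a hypothetical, trimmed-down mirror of CameraEnumerator (only `getDeviceNames` and `isFrontFacing`), and `fake` is a test helper invented for this example; the demo's own selection code also tries to create a capturer for each candidate.

```java
final class EnumeratorSketch {
  // Hypothetical, trimmed-down mirror of org.webrtc.CameraEnumerator.
  interface Enumerator {
    String[] getDeviceNames();

    boolean isFrontFacing(String deviceName);
  }

  // Prefers the first front-facing device, then any device, else null.
  static String pickDevice(Enumerator enumerator) {
    String[] names = enumerator.getDeviceNames();
    for (String name : names) {
      if (enumerator.isFrontFacing(name)) {
        return name;
      }
    }
    return names.length > 0 ? names[0] : null;
  }

  // Builds a fake enumerator in which only frontName (may be null) faces front.
  static Enumerator fake(final String[] names, final String frontName) {
    return new Enumerator() {
      @Override
      public String[] getDeviceNames() {
        return names;
      }

      @Override
      public boolean isFrontFacing(String deviceName) {
        return deviceName.equals(frontName);
      }
    };
  }

  public static void main(String[] args) {
    System.out.println(pickDevice(fake(new String[] {"back", "front"}, "front")));
  }
}
```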

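Take Camera1Enumerator, the CameraEnumerator implementation for the Camera1 API, as an example.

Camera1Enumerator#createCapturer

So in the end it constructs a Camera1Capturer, and that is the camera capturer.

Next, look at how Camera1Capturer is implemented.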

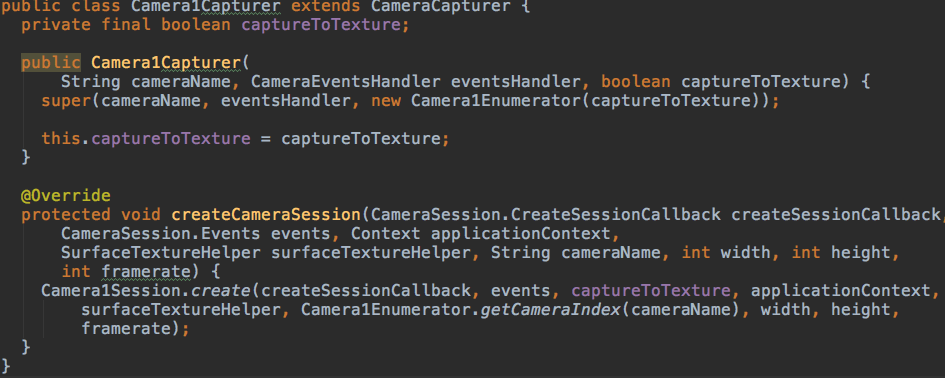

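4. How Camera1Capturer is implemented

Camera capture is implemented by CameraCapturer.

For the two camera APIs it is split into Camera1Capturer and Camera2Capturer.

All of the capture logic is encapsulated in CameraCapturer.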

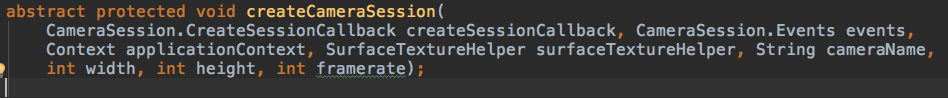

Only the code that creates the CameraSession is implemented differently in the two subclasses.
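This split is the template method pattern: the base class owns the shared flow and defers one step to subclasses. A minimal sketch, with hypothetical class names and strings in place of real camera sessions:

```java
final class TemplateMethodSketch {
  // Plays the role of CameraCapturer: shared logic plus one abstract hook.
  abstract static class BaseCapturer {
    // Shared flow, identical for both camera APIs.
    final String startCapture(int width, int height) {
      return createSession() + " " + width + "x" + height;
    }

    // The only step that differs per camera API.
    abstract String createSession();
  }

  // Plays the role of Camera1Capturer.
  static final class Api1Capturer extends BaseCapturer {
    @Override
    String createSession() {
      return "Camera1Session";
    }
  }

  // Plays the role of Camera2Capturer.
  static final class Api2Capturer extends BaseCapturer {
    @Override
    String createSession() {
      return "Camera2Session";
    }
  }

  public static void main(String[] args) {
    System.out.println(new Api1Capturer().startCapture(640, 480));
    System.out.println(new Api2Capturer().startCapture(640, 480));
  }
}
```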

5. That leaves no choice but to dig into the CameraCapturer class itself.

```java
abstract class CameraCapturer implements CameraVideoCapturer {
  enum SwitchState {
    IDLE, // No switch requested.
    PENDING, // Waiting for previous capture session to open.
    IN_PROGRESS, // Waiting for new switched capture session to start.
  }

  private static final String TAG = "CameraCapturer";
  private final static int MAX_OPEN_CAMERA_ATTEMPTS = 3;
  private final static int OPEN_CAMERA_DELAY_MS = 500;
  private final static int OPEN_CAMERA_TIMEOUT = 10000;

  private final CameraEnumerator cameraEnumerator;
  @Nullable private final CameraEventsHandler eventsHandler;
  private final Handler uiThreadHandler;

  @Nullable
  private final CameraSession.CreateSessionCallback createSessionCallback =
      new CameraSession.CreateSessionCallback() {
        @Override
        public void onDone(CameraSession session) {
          checkIsOnCameraThread();
          Logging.d(TAG, "Create session done. Switch state: " + switchState);
          uiThreadHandler.removeCallbacks(openCameraTimeoutRunnable);
          synchronized (stateLock) {
            capturerObserver.onCapturerStarted(true /* success */);
            sessionOpening = false;
            currentSession = session;
            cameraStatistics = new CameraStatistics(surfaceHelper, eventsHandler);
            firstFrameObserved = false;
            stateLock.notifyAll();

            if (switchState == SwitchState.IN_PROGRESS) {
              if (switchEventsHandler != null) {
                switchEventsHandler.onCameraSwitchDone(cameraEnumerator.isFrontFacing(cameraName));
                switchEventsHandler = null;
              }
              switchState = SwitchState.IDLE;
            } else if (switchState == SwitchState.PENDING) {
              switchState = SwitchState.IDLE;
              switchCameraInternal(switchEventsHandler);
            }
          }
        }

        @Override
        public void onFailure(CameraSession.FailureType failureType, String error) {
          checkIsOnCameraThread();
          uiThreadHandler.removeCallbacks(openCameraTimeoutRunnable);
          synchronized (stateLock) {
            capturerObserver.onCapturerStarted(false /* success */);
            openAttemptsRemaining--;

            if (openAttemptsRemaining <= 0) {
              Logging.w(TAG, "Opening camera failed, passing: " + error);
              sessionOpening = false;
              stateLock.notifyAll();

              if (switchState != SwitchState.IDLE) {
                if (switchEventsHandler != null) {
                  switchEventsHandler.onCameraSwitchError(error);
                  switchEventsHandler = null;
                }
                switchState = SwitchState.IDLE;
              }

              if (failureType == CameraSession.FailureType.DISCONNECTED) {
                eventsHandler.onCameraDisconnected();
              } else {
                eventsHandler.onCameraError(error);
              }
            } else {
              Logging.w(TAG, "Opening camera failed, retry: " + error);
              createSessionInternal(OPEN_CAMERA_DELAY_MS);
            }
          }
        }
      };

  @Nullable
  private final CameraSession.Events cameraSessionEventsHandler = new CameraSession.Events() {
    @Override
    public void onCameraOpening() {
      checkIsOnCameraThread();
      synchronized (stateLock) {
        if (currentSession != null) {
          Logging.w(TAG, "onCameraOpening while session was open.");
          return;
        }
        eventsHandler.onCameraOpening(cameraName);
      }
    }

    @Override
    public void onCameraError(CameraSession session, String error) {
      checkIsOnCameraThread();
      synchronized (stateLock) {
        if (session != currentSession) {
          Logging.w(TAG, "onCameraError from another session: " + error);
          return;
        }
        eventsHandler.onCameraError(error);
        stopCapture();
      }
    }

    @Override
    public void onCameraDisconnected(CameraSession session) {
      checkIsOnCameraThread();
      synchronized (stateLock) {
        if (session != currentSession) {
          Logging.w(TAG, "onCameraDisconnected from another session.");
          return;
        }
        eventsHandler.onCameraDisconnected();
        stopCapture();
      }
    }

    @Override
    public void onCameraClosed(CameraSession session) {
      checkIsOnCameraThread();
      synchronized (stateLock) {
        if (session != currentSession && currentSession != null) {
          Logging.d(TAG, "onCameraClosed from another session.");
          return;
        }
        eventsHandler.onCameraClosed();
      }
    }

    @Override
    public void onFrameCaptured(CameraSession session, VideoFrame frame) {
      checkIsOnCameraThread();
      synchronized (stateLock) {
        if (session != currentSession) {
          Logging.w(TAG, "onFrameCaptured from another session.");
          return;
        }
        if (!firstFrameObserved) {
          eventsHandler.onFirstFrameAvailable();
          firstFrameObserved = true;
        }
        cameraStatistics.addFrame();
        capturerObserver.onFrameCaptured(frame);
      }
    }
  };

  private final Runnable openCameraTimeoutRunnable = new Runnable() {
    @Override
    public void run() {
      eventsHandler.onCameraError("Camera failed to start within timeout.");
    }
  };

  // Initialized on initialize
  // -------------------------
  @Nullable private Handler cameraThreadHandler;
  private Context applicationContext;
  private CapturerObserver capturerObserver;
  @Nullable private SurfaceTextureHelper surfaceHelper;

  private final Object stateLock = new Object();
  private boolean sessionOpening; /* guarded by stateLock */
  @Nullable private CameraSession currentSession; /* guarded by stateLock */
  private String cameraName; /* guarded by stateLock */
  private int width; /* guarded by stateLock */
  private int height; /* guarded by stateLock */
  private int framerate; /* guarded by stateLock */
  private int openAttemptsRemaining; /* guarded by stateLock */
  private SwitchState switchState = SwitchState.IDLE; /* guarded by stateLock */
  @Nullable private CameraSwitchHandler switchEventsHandler; /* guarded by stateLock */
  // Valid from onDone call until stopCapture, otherwise null.
  @Nullable private CameraStatistics cameraStatistics; /* guarded by stateLock */
  private boolean firstFrameObserved; /* guarded by stateLock */

  public CameraCapturer(String cameraName, @Nullable CameraEventsHandler eventsHandler,
      CameraEnumerator cameraEnumerator) {
    if (eventsHandler == null) {
      eventsHandler = new CameraEventsHandler() {
        @Override
        public void onCameraError(String errorDescription) {}
        @Override
        public void onCameraDisconnected() {}
        @Override
        public void onCameraFreezed(String errorDescription) {}
        @Override
        public void onCameraOpening(String cameraName) {}
        @Override
        public void onFirstFrameAvailable() {}
        @Override
        public void onCameraClosed() {}
      };
    }

    this.eventsHandler = eventsHandler;
    this.cameraEnumerator = cameraEnumerator;
    this.cameraName = cameraName;
    uiThreadHandler = new Handler(Looper.getMainLooper());

    final String[] deviceNames = cameraEnumerator.getDeviceNames();

    if (deviceNames.length == 0) {
      throw new RuntimeException("No cameras attached.");
    }
    if (!Arrays.asList(deviceNames).contains(this.cameraName)) {
      throw new IllegalArgumentException(
          "Camera name " + this.cameraName + " does not match any known camera device.");
    }
  }

  @Override
  public void initialize(@Nullable SurfaceTextureHelper surfaceTextureHelper,
      Context applicationContext, CapturerObserver capturerObserver) {
    this.applicationContext = applicationContext;
    this.capturerObserver = capturerObserver;
    this.surfaceHelper = surfaceTextureHelper;
    this.cameraThreadHandler =
        surfaceTextureHelper == null ? null : surfaceTextureHelper.getHandler();
  }

  @Override
  public void startCapture(int width, int height, int framerate) {
    Logging.d(TAG, "startCapture: " + width + "x" + height + "@" + framerate);
    if (applicationContext == null) {
      throw new RuntimeException("CameraCapturer must be initialized before calling startCapture.");
    }

    synchronized (stateLock) {
      if (sessionOpening || currentSession != null) {
        Logging.w(TAG, "Session already open");
        return;
      }

      this.width = width;
      this.height = height;
      this.framerate = framerate;

      sessionOpening = true;
      openAttemptsRemaining = MAX_OPEN_CAMERA_ATTEMPTS;
      createSessionInternal(0);
    }
  }

  private void createSessionInternal(int delayMs) {
    uiThreadHandler.postDelayed(openCameraTimeoutRunnable, delayMs + OPEN_CAMERA_TIMEOUT);
    cameraThreadHandler.postDelayed(new Runnable() {
      @Override
      public void run() {
        createCameraSession(createSessionCallback, cameraSessionEventsHandler, applicationContext,
            surfaceHelper, cameraName, width, height, framerate);
      }
    }, delayMs);
  }

  @Override
  public void stopCapture() {
    Logging.d(TAG, "Stop capture");

    synchronized (stateLock) {
      while (sessionOpening) {
        Logging.d(TAG, "Stop capture: Waiting for session to open");
        try {
          stateLock.wait();
        } catch (InterruptedException e) {
          Logging.w(TAG, "Stop capture interrupted while waiting for the session to open.");
          Thread.currentThread().interrupt();
          return;
        }
      }

      if (currentSession != null) {
        Logging.d(TAG, "Stop capture: Nulling session");
        cameraStatistics.release();
        cameraStatistics = null;
        final CameraSession oldSession = currentSession;
        cameraThreadHandler.post(new Runnable() {
          @Override
          public void run() {
            oldSession.stop();
          }
        });
        currentSession = null;
        capturerObserver.onCapturerStopped();
      } else {
        Logging.d(TAG, "Stop capture: No session open");
      }
    }

    Logging.d(TAG, "Stop capture done");
  }

  @Override
  public void changeCaptureFormat(int width, int height, int framerate) {
    Logging.d(TAG, "changeCaptureFormat: " + width + "x" + height + "@" + framerate);
    synchronized (stateLock) {
      stopCapture();
      startCapture(width, height, framerate);
    }
  }

  @Override
  public void dispose() {
    Logging.d(TAG, "dispose");
    stopCapture();
  }

  @Override
  public void switchCamera(final CameraSwitchHandler switchEventsHandler) {
    Logging.d(TAG, "switchCamera");
    cameraThreadHandler.post(new Runnable() {
      @Override
      public void run() {
        switchCameraInternal(switchEventsHandler);
      }
    });
  }

  @Override
  public boolean isScreencast() {
    return false;
  }

  public void printStackTrace() {
    Thread cameraThread = null;
    if (cameraThreadHandler != null) {
      cameraThread = cameraThreadHandler.getLooper().getThread();
    }
    if (cameraThread != null) {
      StackTraceElement[] cameraStackTrace = cameraThread.getStackTrace();
      if (cameraStackTrace.length > 0) {
        Logging.d(TAG, "CameraCapturer stack trace:");
        for (StackTraceElement traceElem : cameraStackTrace) {
          Logging.d(TAG, traceElem.toString());
        }
      }
    }
  }

  private void reportCameraSwitchError(
      String error, @Nullable CameraSwitchHandler switchEventsHandler) {
    Logging.e(TAG, error);
    if (switchEventsHandler != null) {
      switchEventsHandler.onCameraSwitchError(error);
    }
  }

  private void switchCameraInternal(@Nullable final CameraSwitchHandler switchEventsHandler) {
    Logging.d(TAG, "switchCamera internal");

    final String[] deviceNames = cameraEnumerator.getDeviceNames();

    if (deviceNames.length < 2) {
      if (switchEventsHandler != null) {
        switchEventsHandler.onCameraSwitchError("No camera to switch to.");
      }
      return;
    }

    synchronized (stateLock) {
      if (switchState != SwitchState.IDLE) {
        reportCameraSwitchError("Camera switch already in progress.", switchEventsHandler);
        return;
      }
      if (!sessionOpening && currentSession == null) {
        reportCameraSwitchError("switchCamera: camera is not running.", switchEventsHandler);
        return;
      }

      this.switchEventsHandler = switchEventsHandler;
      if (sessionOpening) {
        switchState = SwitchState.PENDING;
        return;
      } else {
        switchState = SwitchState.IN_PROGRESS;
      }
      Logging.d(TAG, "switchCamera: Stopping session");
      cameraStatistics.release();
      cameraStatistics = null;
      final CameraSession oldSession = currentSession;
      cameraThreadHandler.post(new Runnable() {
        @Override
        public void run() {
          oldSession.stop();
        }
      });
      currentSession = null;

      int cameraNameIndex = Arrays.asList(deviceNames).indexOf(cameraName);
      cameraName = deviceNames[(cameraNameIndex + 1) % deviceNames.length];

      sessionOpening = true;
      openAttemptsRemaining = 1;
      createSessionInternal(0);
    }
    Logging.d(TAG, "switchCamera done");
  }

  private void checkIsOnCameraThread() {
    if (Thread.currentThread() != cameraThreadHandler.getLooper().getThread()) {
      Logging.e(TAG, "Check is on camera thread failed.");
      throw new RuntimeException("Not on camera thread.");
    }
  }

  protected String getCameraName() {
    synchronized (stateLock) {
      return cameraName;
    }
  }

  abstract protected void createCameraSession(
      CameraSession.CreateSessionCallback createSessionCallback, CameraSession.Events events,
      Context applicationContext, SurfaceTextureHelper surfaceTextureHelper, String cameraName,
      int width, int height, int framerate);
}
```

As it turns out, this is an abstract class, and it leaves the most important method, createCameraSession, to its subclasses.

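So it is back to the CameraCapturer subclasses to see the concrete implementation.

6. Camera1Capturer#createCameraSession, as shown in the figure under step 4

So the real source is CameraSession.

It is the one that captures the video stream.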

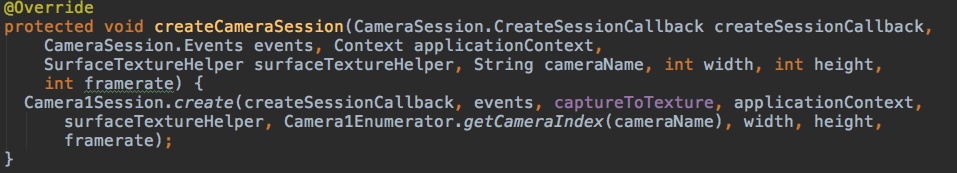

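7. First, look at the CameraSession interface.

It has two main callback interfaces.

One is the session-creation callback: onDone when creation succeeds and onFailure when it fails.

The other is the event callback: camera opening, error, disconnected, closed, and frame captured.

stop() stops capturing.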

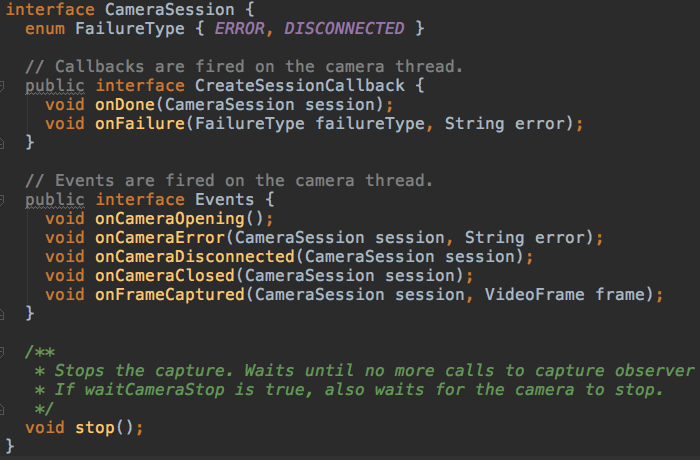
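The creation callback follows a common shape: the factory method returns nothing and reports its result through onDone or onFailure. A minimal sketch of that shape, where `Session`, `CreateCallback` and the `create` function are hypothetical simplifications (the real callback also carries a failure type, and the real session wraps a camera):

```java
import java.util.ArrayList;
import java.util.List;

final class SessionCallbackSketch {
  // Hypothetical simplification of CameraSession: only stop().
  interface Session {
    void stop();
  }

  // Hypothetical simplification of CameraSession.CreateSessionCallback.
  interface CreateCallback {
    void onDone(Session session);

    void onFailure(String error);
  }

  // Reports the result through the callback instead of returning it.
  // A negative id stands in for a camera that cannot be opened.
  static void create(int cameraId, CreateCallback callback) {
    if (cameraId < 0) {
      callback.onFailure("bad camera id " + cameraId);
      return;
    }
    callback.onDone(() -> {}); // A session whose stop() does nothing.
  }

  // Creates one good and one bad session and records which callbacks fired.
  static List<String> run() {
    final List<String> log = new ArrayList<>();
    CreateCallback callback = new CreateCallback() {
      @Override
      public void onDone(Session session) {
        log.add("done");
      }

      @Override
      public void onFailure(String error) {
        log.add("failure: " + error);
      }
    };
    create(0, callback);
    create(-1, callback);
    return log;
  }

  public static void main(String[] args) {
    System.out.println(run());
  }
}
```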

8. Now look at an implementation of CameraSession, such as Camera1Session.

```java
class Camera1Session implements CameraSession {
  private static final String TAG = "Camera1Session";
  private static final int NUMBER_OF_CAPTURE_BUFFERS = 3;

  private static final Histogram camera1StartTimeMsHistogram =
      Histogram.createCounts("WebRTC.Android.Camera1.StartTimeMs", 1, 10000, 50);
  private static final Histogram camera1StopTimeMsHistogram =
      Histogram.createCounts("WebRTC.Android.Camera1.StopTimeMs", 1, 10000, 50);
  private static final Histogram camera1ResolutionHistogram = Histogram.createEnumeration(
      "WebRTC.Android.Camera1.Resolution", CameraEnumerationAndroid.COMMON_RESOLUTIONS.size());

  private static enum SessionState { RUNNING, STOPPED }

  private final Handler cameraThreadHandler;
  private final Events events;
  private final boolean captureToTexture;
  private final Context applicationContext;
  private final SurfaceTextureHelper surfaceTextureHelper;
  private final int cameraId;
  private final android.hardware.Camera camera;
  private final android.hardware.Camera.CameraInfo info;
  private final CaptureFormat captureFormat;
  // Used only for stats. Only used on the camera thread.
  private final long constructionTimeNs; // Construction time of this class.

  private SessionState state;
  private boolean firstFrameReported = false;

  // TODO(titovartem) make correct fix during webrtc:9175
  @SuppressWarnings("ByteBufferBackingArray")
  public static void create(final CreateSessionCallback callback, final Events events,
      final boolean captureToTexture, final Context applicationContext,
      final SurfaceTextureHelper surfaceTextureHelper, final int cameraId, final int width,
      final int height, final int framerate) {
    final long constructionTimeNs = System.nanoTime();
    Logging.d(TAG, "Open camera " + cameraId);
    events.onCameraOpening();

    final android.hardware.Camera camera;
    try {
      camera = android.hardware.Camera.open(cameraId);
    } catch (RuntimeException e) {
      callback.onFailure(FailureType.ERROR, e.getMessage());
      return;
    }

    if (camera == null) {
      callback.onFailure(FailureType.ERROR,
          "android.hardware.Camera.open returned null for camera id = " + cameraId);
      return;
    }

    try {
      camera.setPreviewTexture(surfaceTextureHelper.getSurfaceTexture());
    } catch (IOException | RuntimeException e) {
      camera.release();
      callback.onFailure(FailureType.ERROR, e.getMessage());
      return;
    }

    final android.hardware.Camera.CameraInfo info = new android.hardware.Camera.CameraInfo();
    android.hardware.Camera.getCameraInfo(cameraId, info);

    final CaptureFormat captureFormat;
    try {
      final android.hardware.Camera.Parameters parameters = camera.getParameters();
      captureFormat = findClosestCaptureFormat(parameters, width, height, framerate);
      final Size pictureSize = findClosestPictureSize(parameters, width, height);
      updateCameraParameters(camera, parameters, captureFormat, pictureSize, captureToTexture);
    } catch (RuntimeException e) {
      camera.release();
      callback.onFailure(FailureType.ERROR, e.getMessage());
      return;
    }

    if (!captureToTexture) {
      final int frameSize = captureFormat.frameSize();
      for (int i = 0; i < NUMBER_OF_CAPTURE_BUFFERS; ++i) {
        final ByteBuffer buffer = ByteBuffer.allocateDirect(frameSize);
        camera.addCallbackBuffer(buffer.array());
      }
    }

    // Calculate orientation manually and send it as CVO instead.
    camera.setDisplayOrientation(0 /* degrees */);

    callback.onDone(new Camera1Session(events, captureToTexture, applicationContext,
        surfaceTextureHelper, cameraId, camera, info, captureFormat, constructionTimeNs));
  }

  private static void updateCameraParameters(android.hardware.Camera camera,
      android.hardware.Camera.Parameters parameters, CaptureFormat captureFormat, Size pictureSize,
      boolean captureToTexture) {
    final List<String> focusModes = parameters.getSupportedFocusModes();

    parameters.setPreviewFpsRange(captureFormat.framerate.min, captureFormat.framerate.max);
    parameters.setPreviewSize(captureFormat.width, captureFormat.height);
    parameters.setPictureSize(pictureSize.width, pictureSize.height);
    if (!captureToTexture) {
      parameters.setPreviewFormat(captureFormat.imageFormat);
    }

    if (parameters.isVideoStabilizationSupported()) {
      parameters.setVideoStabilization(true);
    }
    if (focusModes.contains(android.hardware.Camera.Parameters.FOCUS_MODE_CONTINUOUS_VIDEO)) {
      parameters.setFocusMode(android.hardware.Camera.Parameters.FOCUS_MODE_CONTINUOUS_VIDEO);
    }
    camera.setParameters(parameters);
  }

  private static CaptureFormat findClosestCaptureFormat(
      android.hardware.Camera.Parameters parameters, int width, int height, int framerate) {
    // Find closest supported format for |width| x |height| @ |framerate|.
    final List<CaptureFormat.FramerateRange> supportedFramerates =
        Camera1Enumerator.convertFramerates(parameters.getSupportedPreviewFpsRange());
    Logging.d(TAG, "Available fps ranges: " + supportedFramerates);

    final CaptureFormat.FramerateRange fpsRange =
        CameraEnumerationAndroid.getClosestSupportedFramerateRange(supportedFramerates, framerate);

    final Size previewSize = CameraEnumerationAndroid.getClosestSupportedSize(
        Camera1Enumerator.convertSizes(parameters.getSupportedPreviewSizes()), width, height);
    CameraEnumerationAndroid.reportCameraResolution(camera1ResolutionHistogram, previewSize);

    return new CaptureFormat(previewSize.width, previewSize.height, fpsRange);
  }

  private static Size findClosestPictureSize(
      android.hardware.Camera.Parameters parameters, int width, int height) {
    return CameraEnumerationAndroid.getClosestSupportedSize(
        Camera1Enumerator.convertSizes(parameters.getSupportedPictureSizes()), width, height);
  }

  private Camera1Session(Events events, boolean captureToTexture, Context applicationContext,
      SurfaceTextureHelper surfaceTextureHelper, int cameraId, android.hardware.Camera camera,
      android.hardware.Camera.CameraInfo info, CaptureFormat captureFormat,
      long constructionTimeNs) {
    Logging.d(TAG, "Create new camera1 session on camera " + cameraId);

    this.cameraThreadHandler = new Handler();
    this.events = events;
    this.captureToTexture = captureToTexture;
    this.applicationContext = applicationContext;
    this.surfaceTextureHelper = surfaceTextureHelper;
    this.cameraId = cameraId;
    this.camera = camera;
    this.info = info;
    this.captureFormat = captureFormat;
    this.constructionTimeNs = constructionTimeNs;

    startCapturing();
  }

  @Override
  public void stop() {
    Logging.d(TAG, "Stop camera1 session on camera " + cameraId);
    checkIsOnCameraThread();
    if (state != SessionState.STOPPED) {
      final long stopStartTime = System.nanoTime();
      stopInternal();
      final int stopTimeMs = (int) TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - stopStartTime);
      camera1StopTimeMsHistogram.addSample(stopTimeMs);
    }
  }

  private void startCapturing() {
    Logging.d(TAG, "Start capturing");
    checkIsOnCameraThread();

    state = SessionState.RUNNING;

    camera.setErrorCallback(new android.hardware.Camera.ErrorCallback() {
      @Override
      public void onError(int error, android.hardware.Camera camera) {
        String errorMessage;
        if (error == android.hardware.Camera.CAMERA_ERROR_SERVER_DIED) {
          errorMessage = "Camera server died!";
        } else {
          errorMessage = "Camera error: " + error;
        }
        Logging.e(TAG, errorMessage);
        stopInternal();
        if (error == android.hardware.Camera.CAMERA_ERROR_EVICTED) {
          events.onCameraDisconnected(Camera1Session.this);
        } else {
          events.onCameraError(Camera1Session.this, errorMessage);
        }
      }
    });

    if (captureToTexture) {
      listenForTextureFrames();
    } else {
      listenForBytebufferFrames();
    }
    try {
      camera.startPreview();
    } catch (RuntimeException e) {
      stopInternal();
      events.onCameraError(this, e.getMessage());
    }
  }

  private void stopInternal() {
    Logging.d(TAG, "Stop internal");
    checkIsOnCameraThread();
    if (state == SessionState.STOPPED) {
      Logging.d(TAG, "Camera is already stopped");
      return;
    }

    state = SessionState.STOPPED;
    surfaceTextureHelper.stopListening();
    // Note: stopPreview or other driver code might deadlock. Deadlock in
    // android.hardware.Camera._stopPreview(Native Method) has been observed on
    // Nexus 5 (hammerhead), OS version LMY48I.
    camera.stopPreview();
    camera.release();
    events.onCameraClosed(this);
    Logging.d(TAG, "Stop done");
  }

  private void listenForTextureFrames() {
    surfaceTextureHelper.startListening(new SurfaceTextureHelper.OnTextureFrameAvailableListener() {
      @Override
      public void onTextureFrameAvailable(
          int oesTextureId, float[] transformMatrix, long timestampNs) {
        checkIsOnCameraThread();

        if (state != SessionState.RUNNING) {
          Logging.d(TAG, "Texture frame captured but camera is no longer running.");
          surfaceTextureHelper.returnTextureFrame();
          return;
        }

        if (!firstFrameReported) {
          final int startTimeMs =
              (int) TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - constructionTimeNs);
          camera1StartTimeMsHistogram.addSample(startTimeMs);
          firstFrameReported = true;
        }

        int rotation = getFrameOrientation();
        if (info.facing == android.hardware.Camera.CameraInfo.CAMERA_FACING_FRONT) {
          // Undo the mirror that the OS "helps" us with.
          // http://developer.android.com/reference/android/hardware/Camera.html#setDisplayOrientation(int)
          transformMatrix = RendererCommon.multiplyMatrices(
              transformMatrix, RendererCommon.horizontalFlipMatrix());
        }
        final VideoFrame.Buffer buffer =
            surfaceTextureHelper.createTextureBuffer(captureFormat.width, captureFormat.height,
                RendererCommon.convertMatrixToAndroidGraphicsMatrix(transformMatrix));
        final VideoFrame frame = new VideoFrame(buffer, rotation, timestampNs);
        events.onFrameCaptured(Camera1Session.this, frame);
        frame.release();
      }
    });
  }

  private void listenForBytebufferFrames() {
    camera.setPreviewCallbackWithBuffer(new android.hardware.Camera.PreviewCallback() {
      @Override
      public void onPreviewFrame(final byte[] data, android.hardware.Camera callbackCamera) {
        checkIsOnCameraThread();

        if (callbackCamera != camera) {
          Logging.e(TAG, "Callback from a different camera. This should never happen.");
          return;
        }

        if (state != SessionState.RUNNING) {
          Logging.d(TAG, "Bytebuffer frame captured but camera is no longer running.");
          return;
        }

        final long captureTimeNs = TimeUnit.MILLISECONDS.toNanos(SystemClock.elapsedRealtime());

        if (!firstFrameReported) {
          final int startTimeMs =
              (int) TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - constructionTimeNs);
          camera1StartTimeMsHistogram.addSample(startTimeMs);
          firstFrameReported = true;
        }

        VideoFrame.Buffer frameBuffer = new NV21Buffer(
            data, captureFormat.width, captureFormat.height, () -> cameraThreadHandler.post(() -> {
              if (state == SessionState.RUNNING) {
                camera.addCallbackBuffer(data);
              }
            }));
        final VideoFrame frame = new VideoFrame(frameBuffer, getFrameOrientation(), captureTimeNs);
        events.onFrameCaptured(Camera1Session.this, frame);
        frame.release();
      }
    });
  }

  private int getDeviceOrientation() {
    int orientation = 0;

    WindowManager wm = (WindowManager) applicationContext.getSystemService(Context.WINDOW_SERVICE);
    switch (wm.getDefaultDisplay().getRotation()) {
      case Surface.ROTATION_90:
        orientation = 90;
        break;
      case Surface.ROTATION_180:
        orientation = 180;
        break;
      case Surface.ROTATION_270:
        orientation = 270;
        break;
      case Surface.ROTATION_0:
      default:
        orientation = 0;
        break;
    }
    return orientation;
  }

  private int getFrameOrientation() {
    int rotation = getDeviceOrientation();
    if (info.facing == android.hardware.Camera.CameraInfo.CAMERA_FACING_BACK) {
      rotation = 360 - rotation;
    }
    return (info.orientation + rotation) % 360;
  }

  private void checkIsOnCameraThread() {
    if (Thread.currentThread() != cameraThreadHandler.getLooper().getThread()) {
      throw new IllegalStateException("Wrong thread");
    }
  }
}
```

Camera1Session#create creates a Camera1Session object.

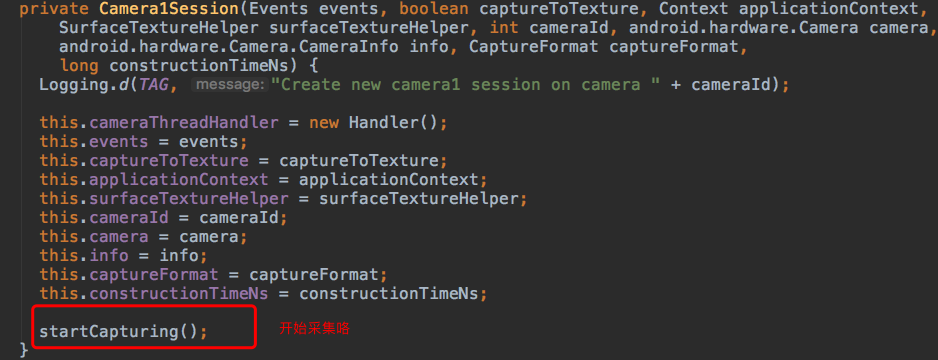

Capturing starts directly in the constructor.

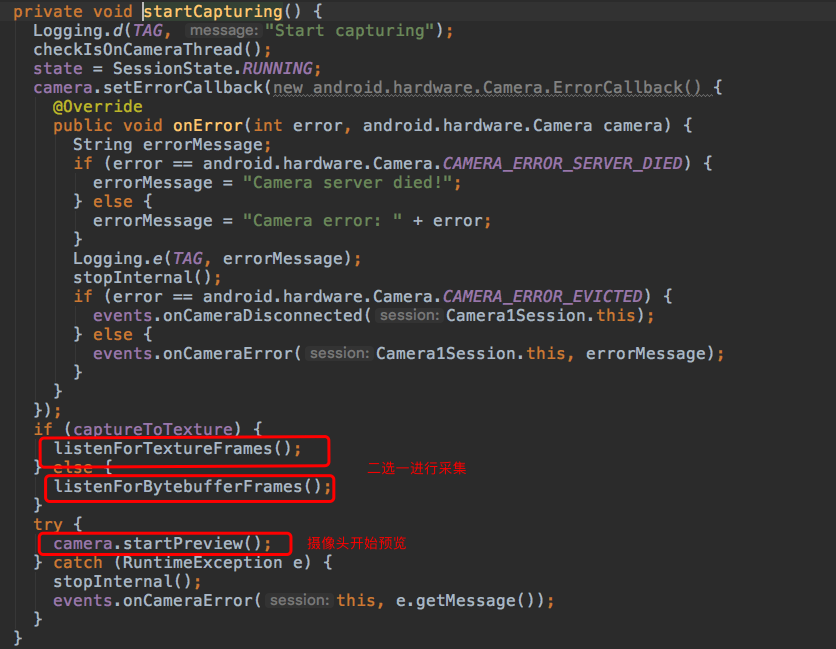

What exactly does Camera1Session#startCapturing do?

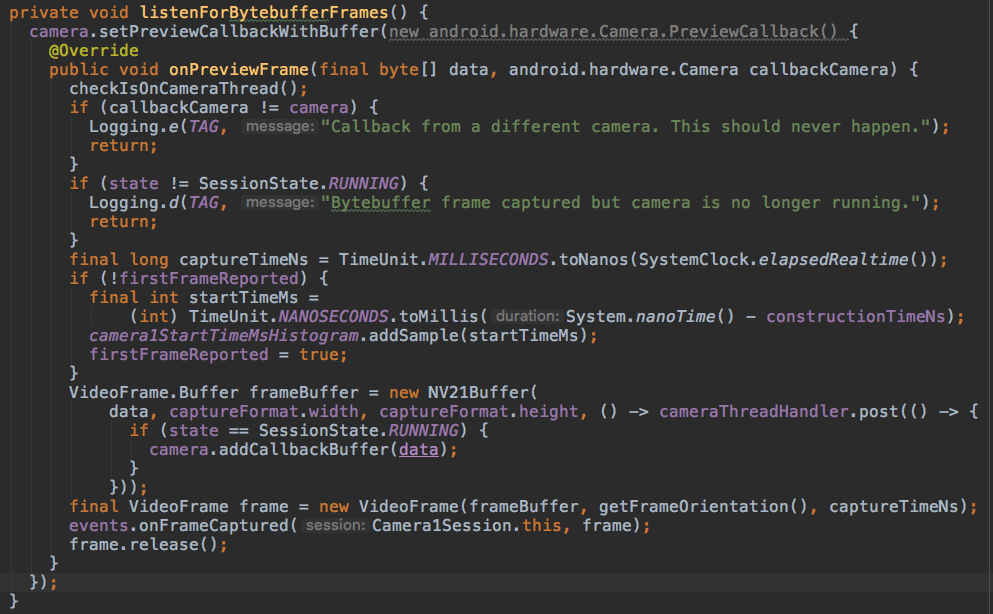

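Now step into Camera1Session#listenForBytebufferFrames, the path taken when captureToTexture is false.

This is where capture actually happens.

Camera#setPreviewCallbackWithBuffer makes the camera deliver its preview data into a buffer.

The raw preview bytes are then wrapped in a VideoFrame.Buffer; an NV21Buffer is used for this.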
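Two details of this path can be sketched in plain Java: how large an NV21 preview buffer is, and how a buffer goes back to the camera once the frame is released. The sketch assumes even frame dimensions and uses an `ArrayDeque` as a hypothetical stand-in for the camera's internal queue of callback buffers; none of these names come from the WebRTC source.

```java
import java.util.ArrayDeque;
import java.util.Deque;

final class Nv21Sketch {
  // NV21 stores a full-resolution Y plane followed by interleaved V/U samples
  // at quarter resolution, which works out to 12 bits per pixel.
  static int frameSize(int width, int height) {
    return width * height * 12 / 8;
  }

  // Simulates one capture cycle with three buffers. Returns the number of
  // buffers the camera holds while a frame is in use, then after release.
  static int[] recycle() {
    final Deque<byte[]> cameraQueue = new ArrayDeque<>();
    for (int i = 0; i < 3; i++) {
      cameraQueue.add(new byte[frameSize(640, 480)]);
    }
    // The camera fills one buffer and hands it to the preview callback.
    final byte[] data = cameraQueue.poll();
    // Plays the role of the release callback given to NV21Buffer:
    // it gives the array back to the camera for reuse.
    Runnable releaseCallback = () -> cameraQueue.add(data);
    int whileInUse = cameraQueue.size();
    releaseCallback.run();
    return new int[] {whileInUse, cameraQueue.size()};
  }

  public static void main(String[] args) {
    System.out.println(frameSize(640, 480));
    int[] counts = recycle();
    System.out.println(counts[0] + " -> " + counts[1]);
  }
}
```

Reusing a small fixed set of buffers avoids allocating a new array of several hundred kilobytes for every preview frame.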

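Finally, events.onFrameCaptured carries the callback all the way back to Camera1Capturer (through the handler in its base class, CameraCapturer), taking the frame along with it.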
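The relay from session to capturer to observer, including the check that ignores frames from a session that is no longer current, can be sketched as follows. The types are hypothetical simplifications: sessions are plain objects, frames are strings, and there is no locking or threading.

```java
import java.util.ArrayList;
import java.util.List;

final class FrameRelaySketch {
  // Hypothetical stand-in for the capturer's observer.
  interface Observer {
    void onFrameCaptured(String frame);
  }

  // Plays the role of CameraCapturer's session events handler.
  static final class Capturer {
    private final Observer observer;
    private Object currentSession;

    Capturer(Observer observer) {
      this.observer = observer;
    }

    void setCurrentSession(Object session) {
      this.currentSession = session;
    }

    // Called by a session; frames from any session other than the current one are dropped.
    void onFrameCaptured(Object session, String frame) {
      if (session != currentSession) {
        return; // Stale callback from a session that was replaced.
      }
      observer.onFrameCaptured(frame);
    }
  }

  // Delivers one frame from an old session and one from the current session.
  static List<String> run() {
    final List<String> delivered = new ArrayList<>();
    Capturer capturer = new Capturer(frame -> delivered.add(frame));
    Object oldSession = new Object();
    Object newSession = new Object();
    capturer.setCurrentSession(newSession);
    capturer.onFrameCaptured(oldSession, "stale");
    capturer.onFrameCaptured(newSession, "frame-1");
    return delivered;
  }

  public static void main(String[] args) {
    System.out.println(run());
  }
}
```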